More detailed walkthrough

See the simple walkthrough for a quicker introduction to the PyDDM package. Here, we will go into a little more depth.

Tip: Before working through this, go through the simple walkthrough.

Importing the necessary modules

We will need to import numpy, matplotlib, and xarray. We will also import the PyDDM package.

[1]:

%matplotlib inline

import matplotlib

import matplotlib.pyplot as plt

import numpy as np #numerical python used for working with arrays, mathematical operations

import xarray as xr #package for labeling and adding metadata to multi-dimensional arrays

import sys

sys.path.append("../../PyDDM") #must point to the PyDDM folder

import ddm_analysis_and_fitting as ddm

Initializing DDM_Analysis class and computing the DDM matrix

When initialized, the instance of the DDM_Analysis class we create needs metadata about the images to analyze, along with the analysis and fitting parameters. These can be supplied in a yaml file, as shown in the following cell of code (there, the metadata is saved in the file “example_data_silica_beads.yml”). Alternatively, one can initialize DDM_Analysis with a dictionary containing the necessary metadata. One way to create such a dictionary and then use it to initialize DDM_Analysis is shown below.

import yaml

ddm_analysis_parameters_str = """
DataDirectory: 'C:/Users/rmcgorty/Documents/GitHub/DDM-at-USD/ExampleData/'
FileName: 'images_nobin_40x_128x128_8bit.tif'
Metadata:
  pixel_size: 0.242 # size of pixel in um
  frame_rate: 41.7 #frames per second
Analysis_parameters:
  number_lag_times: 40
  last_lag_time: 600
  binning: no
Fitting_parameters:
  model: 'DDM Matrix - Single Exponential'
  Tau: [1.0, 0.001, 10]
  StretchingExp: [1.0, 0.5, 1.1]
  Amplitude: [1e2, 1, 1e6]
  Background: [2.5e4, 0, 1e7]
  Good_q_range: [5, 20]
  Auto_update_good_q_range: True
"""

parameters_as_dictionary = yaml.safe_load(ddm_analysis_parameters_str)

ddm_calc = ddm.DDM_Analysis(parameters_as_dictionary)

[2]:

#The yaml file `example_data_silica_beads.yml` contains the metadata and parameters above

ddm_calc = ddm.DDM_Analysis("../../Examples/example_data_silica_beads.yml")

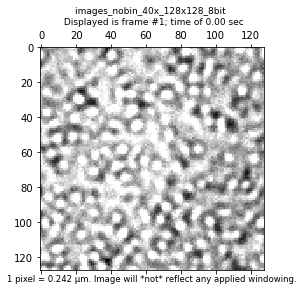

Provided metadata: {'pixel_size': 0.242, 'frame_rate': 41.7}

Image shape: 3000-by-128-by-128

Number of frames to use for analysis: 3000

Maximum lag time (in frames): 600

Number of lag times to compute DDM matrix: 40

Below, with the method calculate_DDM_matrix, we compute the DDM matrix and some associated data. The data will be stored as an xarray Dataset as an attribute to ddm_calc called ddm_dataset.

Note: calculate_DDM_matrix accepts a few optional arguments. The first is quiet (True or False; False by default). The rest are optional keyword arguments, all of which can also be specified in the YAML file: overlap_method, which sets the degree of overlap between image pairs when finding all image differences for each lag time and is either 0, 1, 2, or 3; background_method, which sets how the background parameter B is estimated and is either 0, 1, 2, or 3; and number_lag_times. If any of these three keyword arguments is set here, it overrides the value specified in the YAML file.
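To make concrete what "finding all image differences for each lag time" means, here is a minimal sketch of the textbook DDM calculation for a single lag: subtract frames separated by the lag, Fourier transform each difference image, and average the squared magnitudes. This is an illustration only and not PyDDM's implementation. The function name `ddm_matrix_single_lag`, the normalization by the number of pixels, and the synthetic random movie are all assumptions made for the example, and it uses every available frame pair rather than reproducing any particular overlap_method setting.

```python
import numpy as np

def ddm_matrix_single_lag(images, lag):
    """Average |FFT(I(t + lag) - I(t))|^2 over all frame pairs separated by `lag`.

    Hypothetical helper for illustration; not part of PyDDM.
    """
    images = np.asarray(images, dtype=float)
    # All difference images for this lag (every possible pair is used)
    diffs = images[lag:] - images[:-lag]
    # Squared magnitude of the 2D Fourier transform of each difference image
    power = np.abs(np.fft.fft2(diffs, axes=(-2, -1))) ** 2
    n_pixels = images.shape[1] * images.shape[2]
    # Average over pairs, move q = 0 to the centre, normalise by pixel count (assumed convention)
    return np.fft.fftshift(power.mean(axis=0)) / n_pixels

rng = np.random.default_rng(0)
fake_movie = rng.normal(size=(50, 32, 32))  # synthetic stand-in for an image stack
d_lag3 = ddm_matrix_single_lag(fake_movie, lag=3)
print(d_lag3.shape)
```

Repeating this for each lag and stacking the results gives a three-dimensional array indexed by lag time and the two wavevector components, which is the shape of the ddm_matrix_full variable in the dataset below.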

[3]:

ddm_calc.calculate_DDM_matrix()

2022-02-10 14:00:30,212 - DDM Calculations - Running dt = 1...

2022-02-10 14:00:37,571 - DDM Calculations - Running dt = 5...

2022-02-10 14:00:43,229 - DDM Calculations - Running dt = 9...

2022-02-10 14:00:47,878 - DDM Calculations - Running dt = 16...

2022-02-10 14:00:51,469 - DDM Calculations - Running dt = 27...

2022-02-10 14:00:54,913 - DDM Calculations - Running dt = 47...

2022-02-10 14:00:58,136 - DDM Calculations - Running dt = 81...

2022-02-10 14:01:01,219 - DDM Calculations - Running dt = 138...

2022-02-10 14:01:04,153 - DDM Calculations - Running dt = 236...

2022-02-10 14:01:07,126 - DDM Calculations - Running dt = 402...

DDM matrix took 39.838425159454346 seconds to compute.

Background estimate ± std is 211.17 ± 1.49

[3]:

<xarray.Dataset>

Dimensions: (lagtime: 40, q_y: 128, q_x: 128, q: 64, y: 128, x: 128, frames: 40)

Coordinates:

* lagtime (lagtime) float64 0.02398 0.04796 0.07194 ... 12.59 14.36

framelag (frames) int32 1 2 3 4 5 6 7 ... 308 352 402 459 525 599

* q_y (q_y) float64 -12.98 -12.78 -12.58 ... 12.37 12.58 12.78

* q_x (q_x) float64 -12.98 -12.78 -12.58 ... 12.37 12.58 12.78

* q (q) float64 0.0 0.2028 0.4057 0.6085 ... 12.37 12.58 12.78

* y (y) int32 0 1 2 3 4 5 6 7 ... 121 122 123 124 125 126 127

* x (x) int32 0 1 2 3 4 5 6 7 ... 121 122 123 124 125 126 127

Dimensions without coordinates: frames

Data variables:

ddm_matrix_full (lagtime, q_y, q_x) float64 194.5 183.5 ... 192.0 196.8

ddm_matrix (lagtime, q) float64 0.0 294.2 321.4 ... 207.8 201.1 200.4

first_image (y, x) float64 128.0 149.0 173.0 ... 175.0 229.0 215.0

avg_image_ft (q) float64 0.0 1.293e+05 5.225e+03 ... 105.3 104.7 105.3

num_pairs_per_dt (lagtime) int32 2999 2998 2997 1498 1498 ... 20 17 15 13

B float64 211.2

B_std float64 1.491

Amplitude (q) float64 -211.2 2.585e+05 1.024e+04 ... -1.699 -0.52

ISF (lagtime, q) float64 0.0 0.9997 0.9892 ... -4.952 -19.73

Attributes:
    units: Intensity
    lagtime: sec
    q: μm$^{-1}$
    x: pixels
    y: pixels
    info: ddm_matrix is the averages of FFT difference images, ravs are the radial averages
    BackgroundMethod: 0
    OverlapMethod: 2
    DataDirectory: C:/Users/rmcgorty/Documents/GitHub/PyDDM/Examples/
    FileName: images_nobin_40x_128x128_8bit.tif
    pixel_size: 0.242
    frame_rate: 41.7
    starting_frame_number: no
    ending_frame_number: no
    number_lagtimes: 40
    first_lag_time: yes
    last_lag_time: 600
    crop_to_roi: no
    split_into_4_rois: no
    use_windowing_function: no
    binning: no
    bin_size: 2
    central_angle: no
    angle_range: no

Digging into the DDM xarray.Dataset

Let’s look at the dataset created after running calculate_DDM_matrix.

[4]:

display(ddm_calc.ddm_dataset)

<xarray.Dataset>

Dimensions: (lagtime: 40, q_y: 128, q_x: 128, q: 64, y: 128, x: 128, frames: 40)

Coordinates:

* lagtime (lagtime) float64 0.02398 0.04796 0.07194 ... 12.59 14.36

framelag (frames) int32 1 2 3 4 5 6 7 ... 308 352 402 459 525 599

* q_y (q_y) float64 -12.98 -12.78 -12.58 ... 12.37 12.58 12.78

* q_x (q_x) float64 -12.98 -12.78 -12.58 ... 12.37 12.58 12.78

* q (q) float64 0.0 0.2028 0.4057 0.6085 ... 12.37 12.58 12.78

* y (y) int32 0 1 2 3 4 5 6 7 ... 121 122 123 124 125 126 127

* x (x) int32 0 1 2 3 4 5 6 7 ... 121 122 123 124 125 126 127

Dimensions without coordinates: frames

Data variables:

ddm_matrix_full (lagtime, q_y, q_x) float64 194.5 183.5 ... 192.0 196.8

ddm_matrix (lagtime, q) float64 0.0 294.2 321.4 ... 207.8 201.1 200.4

first_image (y, x) float64 128.0 149.0 173.0 ... 175.0 229.0 215.0

avg_image_ft (q) float64 0.0 1.293e+05 5.225e+03 ... 105.3 104.7 105.3

num_pairs_per_dt (lagtime) int32 2999 2998 2997 1498 1498 ... 20 17 15 13

B float64 211.2

B_std float64 1.491

Amplitude (q) float64 -211.2 2.585e+05 1.024e+04 ... -1.699 -0.52

ISF (lagtime, q) float64 0.0 0.9997 0.9892 ... -4.952 -19.73

Attributes: (12/24)

units: Intensity

lagtime: sec

q: μm$^{-1}$

x: pixels

y: pixels

info: ddm_matrix is the averages of FFT difference ima...

... ...

split_into_4_rois: no

use_windowing_function: no

binning: no

bin_size: 2

central_angle: no

angle_range: no
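The coordinate values in this dataset are consistent with two simple conversions: a lag time in seconds is the frame lag divided by the frame rate, and the wavevector spacing is 2π divided by the image width in micrometres. The sketch below checks this with the metadata used here (pixel_size of 0.242 μm, frame_rate of 41.7 frames per second, 128-pixel images). The variable names are illustrative, and the formulas are inferred from the printed coordinates rather than taken from the PyDDM source.

```python
import math

pixel_size = 0.242   # micrometres per pixel (from the metadata)
frame_rate = 41.7    # frames per second (from the metadata)
image_size = 128     # pixels along each side of the image

# Lag time in seconds = lag in frames / frame rate
frame_lags = [1, 2, 3, 599]
lag_times = [lag / frame_rate for lag in frame_lags]

# Wavevector spacing set by the physical width of the image
dq = 2 * math.pi / (image_size * pixel_size)

# q_x and q_y run over centred integer multiples of dq; q holds the non-negative radial values
q_x = [dq * k for k in range(-(image_size // 2), image_size // 2)]
q_radial = [dq * k for k in range(image_size // 2)]

print([round(t, 6) for t in lag_times])
print(round(dq, 6), round(q_x[0], 6), round(q_x[-1], 6), len(q_radial))
```

The first and last lag times, the spacing between q values, and the end points of q_x computed this way can be compared directly against the lagtime, q, and q_x coordinates in the dataset.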

[5]:

print("Notice the data variables: \n", ddm_calc.ddm_dataset.data_vars)

Notice the data variables:

Data variables:

ddm_matrix_full (lagtime, q_y, q_x) float64 194.5 183.5 ... 192.0 196.8

ddm_matrix (lagtime, q) float64 0.0 294.2 321.4 ... 207.8 201.1 200.4

first_image (y, x) float64 128.0 149.0 173.0 ... 175.0 229.0 215.0

avg_image_ft (q) float64 0.0 1.293e+05 5.225e+03 ... 105.3 104.7 105.3

num_pairs_per_dt (lagtime) int32 2999 2998 2997 1498 1498 ... 20 17 15 13

B float64 211.2

B_std float64 1.491

Amplitude (q) float64 -211.2 2.585e+05 1.024e+04 ... -1.699 -0.52

ISF (lagtime, q) float64 0.0 0.9997 0.9892 ... -4.952 -19.73
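The Amplitude and ISF variables appear to follow from the standard DDM relation \(D(q, \Delta t) = A(q)\,[1 - f(q, \Delta t)] + B\), with \(A(q)\) estimated as twice avg_image_ft minus B. This reading is inferred from the numbers in this dataset and not from the PyDDM source, so treat it as an assumption. The sketch below checks it using full-precision values from this dataset for the first lag time and the second q value (q index 1).

```python
# Full-precision values from this notebook's dataset (lag time index 0, q index 1)
B = 211.17365621               # background estimate
avg_image_ft = 1.29341509e+05  # avg_image_ft at q index 1
ddm_value = 294.18943955       # ddm_matrix at lag time index 0, q index 1

# Assumed relations (inferred from the data, not from the PyDDM source):
#   A(q)     = 2 * avg_image_ft(q) - B
#   f(q, dt) = 1 - (D(q, dt) - B) / A(q)
amplitude = 2 * avg_image_ft - B
isf = 1 - (ddm_value - B) / amplitude

print(amplitude, isf)
```

The results can be compared with the Amplitude entry 2.585e+05 and the ISF entry 0.9997 shown in the data variables. The same relations also explain why Amplitude and ISF become negative or large in magnitude at high q, where the DDM matrix falls to values near or below B.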

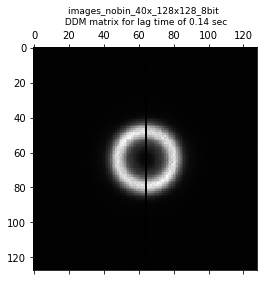

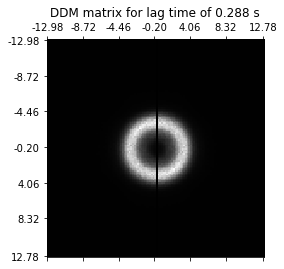

Within this ddm_dataset, we have the DDM matrix \(D(q_x, q_y, \Delta t)\) stored as ddm_matrix_full. We can take a look at a slice of this matrix with the matshow function in matplotlib.

[6]:

###############################################################

# Displaying a slice of the full three-dimensional DDM matrix #

###############################################################

lagtime_for_ddmmatrix = 10

fig,ax = plt.subplots()

plt.matshow(ddm_calc.ddm_dataset.ddm_matrix_full[lagtime_for_ddmmatrix], fignum=0, cmap='gray')

#Setting up the ticks and tick labels so that they match q_x and q_y

ticks = np.linspace(0,ddm_calc.ddm_dataset.ddm_matrix_full.shape[1]-1,7,dtype=int)

ticklabelsx = ["{:4.2f}".format(i) for i in ddm_calc.ddm_dataset.q_x[ticks].values]

ticklabelsy = ["{:4.2f}".format(i) for i in ddm_calc.ddm_dataset.q_y[ticks].values]

ax.set_xticks(ticks)

ax.set_xticklabels(ticklabelsx)

ax.set_yticks(ticks)

ax.set_yticklabels(ticklabelsy)

plt.title("DDM matrix for lag time of %.3f s" % ddm_calc.ddm_dataset.lagtime[lagtime_for_ddmmatrix])

[6]:

Text(0.5, 1.0, 'DDM matrix for lag time of 0.288 s')

The slice of the DDM matrix above looks pretty radially symmetric. This is expected as we are analyzing a video of silica particles diffusing randomly with no preferred direction. Because of this symmetry, we can radially average the DDM matrix to go from \(D(q_x, q_y, \Delta t)\) to \(D(q, \Delta t)\) where \(q = \sqrt{q_x^2 + q_y^2}\).
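The radial averaging described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not PyDDM's own implementation: the function name `radial_average` is hypothetical, the array is assumed to be centered (as after `np.fft.fftshift`), and pixels are grouped into rings by rounding their distance from the center to the nearest integer.

```python
import numpy as np

def radial_average(image_2d, n_bins=None):
    """Average a 2D array over rings of constant radius about its center."""
    ny, nx = image_2d.shape
    # Pixel coordinates measured from the center of the (shifted) array
    y, x = np.indices((ny, nx))
    r = np.sqrt((x - nx // 2) ** 2 + (y - ny // 2) ** 2)
    # Assign each pixel to the ring with the nearest integer radius
    ring = np.rint(r).astype(int)
    if n_bins is None:
        n_bins = min(nx, ny) // 2
    keep = ring < n_bins
    # Sum of the values and number of pixels in each ring
    sums = np.bincount(ring[keep], weights=image_2d[keep], minlength=n_bins)
    counts = np.bincount(ring[keep], minlength=n_bins)
    return sums / counts

# Synthetic, radially symmetric test image: the value depends only on the radius
yy, xx = np.indices((128, 128))
radius = np.sqrt((xx - 64) ** 2 + (yy - 64) ** 2)
test_image = np.exp(-radius / 20.0)
profile = radial_average(test_image)
```

For a 128 x 128 array this returns 64 values, one per ring, which is the same number of q values as in the ddm_dataset here.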

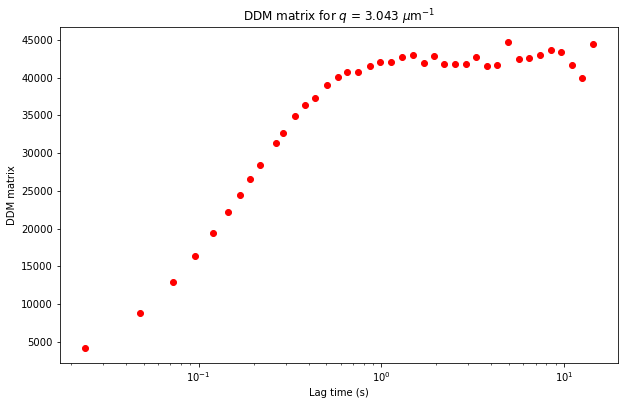

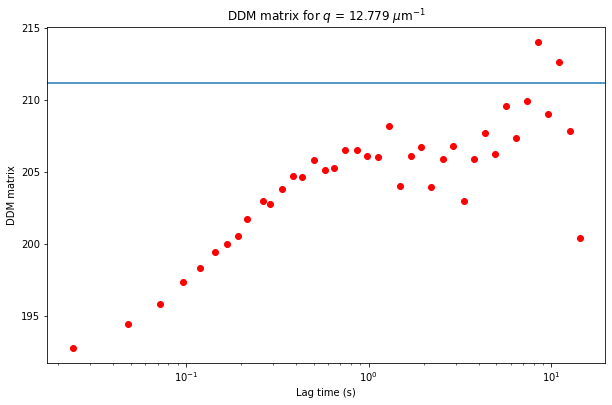

Let’s plot a slice of this radially averaged DDM matrix \(D(q, \Delta t)\) (which is stored as the variable ddm_matrix in the ddm_dataset).

[7]:

######################################################################

# Plotting the radially averaged DDM matrix for a particular q-value #

######################################################################

q_index = 15 #index of the list of q values

plt.figure(figsize=(10,10/1.618))

plt.semilogx(ddm_calc.ddm_dataset.lagtime, ddm_calc.ddm_dataset.ddm_matrix[:,q_index], 'ro')

plt.xlabel("Lag time (s)")

plt.ylabel("DDM matrix")

plt.title(r"DDM matrix for $q$ = %.3f $\mu$m$^{-1}$" % ddm_calc.ddm_dataset.q[q_index]);

Different data selecting methods#

Since we are slicing this xarray dataset, let’s use the opportunity to go over different ways of selecting data stored in a dataset. See the xarray user guide for more info.

We can use the isel function (see xarray.DataArray.isel).

q_index = 15 #index of the list of q values

plt.figure(figsize=(10,10/1.618))

plt.semilogx(ddm_calc.ddm_dataset.lagtime, ddm_calc.ddm_dataset.ddm_matrix.isel(q=q_index), 'ro')

plt.xlabel("Lag time (s)")

plt.ylabel("DDM matrix")

plt.title("DDM matrix for q = %.3f " % ddm_calc.ddm_dataset.q[q_index])

We can select the desired q not based on its index but on its actual value with sel (see xarray.DataArray.sel). Note that here we have to use method = 'nearest' since we do not have a q value of exactly 3 \(\mu\)m\(^{-1}\).

q_index = 15 #index of the list of q values

plt.figure(figsize=(10,10/1.618))

plt.semilogx(ddm_calc.ddm_dataset.lagtime, ddm_calc.ddm_dataset.ddm_matrix.sel(q=3, method='nearest'), 'ro')

plt.xlabel("Lag time (s)")

plt.ylabel("DDM matrix")

plt.title("DDM matrix for q = %.3f " % ddm_calc.ddm_dataset.q[q_index])

Lastly, we can create this plot (using whichever slicing method) with xarray’s plotting function (which just wraps matplotlib.pyplot). For more, see xarray.DataArray.plot.

q_index = 15

ddm_calc.ddm_dataset.ddm_matrix.isel(q=q_index).plot(xscale='log', marker='o', ls='none')
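To see what a nearest-value lookup amounts to, here is a minimal NumPy sketch. It does not call xarray; it only mimics the idea behind selecting by value with a nearest match, on a q grid built with the same spacing as in this example (the step of 0.20284 is copied from the q coordinate printed in this walkthrough).

```python
import numpy as np

# q grid with the same spacing as in this example (64 values, step ~0.2028 um^-1)
q = np.arange(64) * 0.20284

target_q = 3.0
# Index of the grid point closest to the requested value
nearest_index = int(np.abs(q - target_q).argmin())
nearest_q = float(q[nearest_index])

print(nearest_index, round(nearest_q, 3))
```

The closest grid point to 3 \(\mu\)m\(^{-1}\) is at index 15, which is why selecting by index with q_index = 15 and selecting by value with q=3 pick out the same slice.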

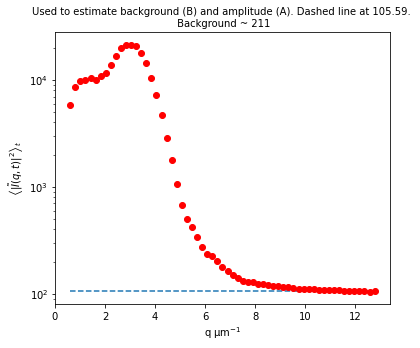

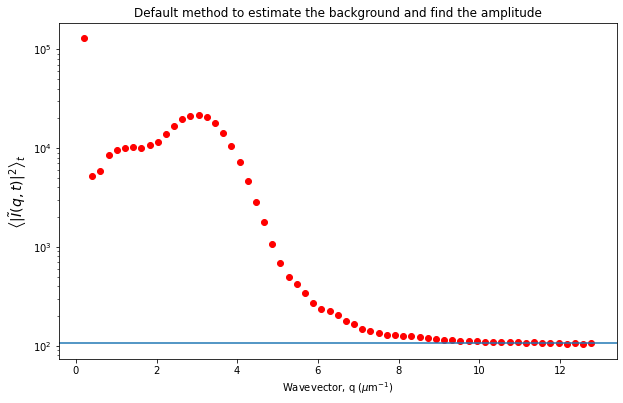

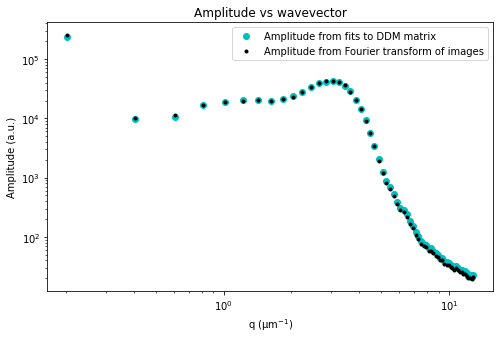

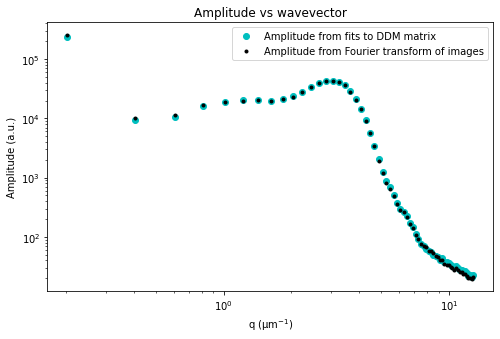

Determining the A and B parameters to get the ISF#

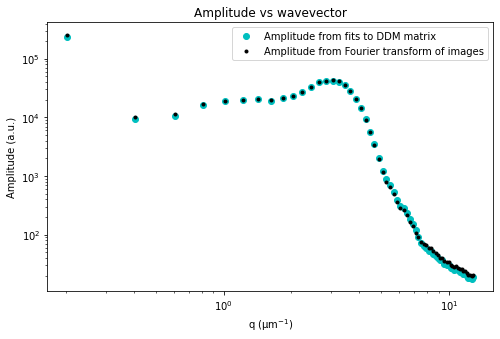

Let’s now look at the avg_image_ft data variable. This is the radial average of the time-averaged squared modulus of the Fourier-transformed images (i.e., the radial average of \(\left< | \tilde{I}(q, t) |^2 \right>_t\)). This function should equal \((1/2) \times \left[ A(q) + B(q) \right]\).

If we make the assumption that at the highest wavevectors (large \(q\)) the amplitude, \(A(q)\), goes to zero, then we can estimate the background, \(B\). If we also assume that the background is a constant that’s independent of \(q\), then we can determine \(A(q)\).

Plotted below is this avg_image_ft along with a horizontal line which can be used to estimate half the background. This estimate is taken from the last 10% of the wavevectors. The background is saved as the B data variable, and the amplitude determined from it is saved as the Amplitude data variable.

Note: What is described above is the default method for getting the background. Other methods can be selected with the optional parameter ‘background_method’ passed to the method ‘calculate_DDM_matrix’.
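The default estimate can be reproduced by hand as a quick check. The sketch below is not PyDDM's code; it simply applies the two assumptions above (\(A \rightarrow 0\) at high \(q\), and \(B\) independent of \(q\)) to values of avg_image_ft copied from the dataset printed in this walkthrough.

```python
import numpy as np

# Highest-q values of avg_image_ft, copied from the printed dataset
# (the last 6 of 64 entries, i.e. int(0.1 * 64) wavevectors)
avg_image_ft_tail = np.array([107.036538, 105.981891, 105.125261,
                              105.313067, 104.737380, 105.326832])

# avg_image_ft ~ (A + B) / 2 and A -> 0 at high q, so B ~ 2 * mean(tail)
B_estimate = 2.0 * avg_image_ft_tail.mean()

# With B assumed independent of q, A(q) = 2 * avg_image_ft(q) - B
avg_image_ft_q1 = 1.29341509e5   # value at the first nonzero q in the printed dataset
A_q1 = 2.0 * avg_image_ft_q1 - B_estimate

print(f"B = {B_estimate:.1f}, A(q1) = {A_q1:.4g}")
```

This gives a background of about 211.2 and an amplitude of about 2.585e+05 at the first nonzero wavevector, in agreement with the B and Amplitude values displayed for the ddm_dataset.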

[8]:

############################################################################################

# Plotting the average squared Fourier-transformed image as a function of the wavevector q #

############################################################################################

plt.figure(figsize=(10,10/1.618))

plt.semilogy(ddm_calc.ddm_dataset.q[1:], ddm_calc.ddm_dataset.avg_image_ft[1:], 'ro')

plt.xlabel(r"Wavevector, q ($\mu$m$^{-1}$)")

plt.ylabel(r"$\left< | \tilde{I}(q, t) |^2 \right>_t$", fontsize=14)

plt.title("Default method to estimate the background and find the amplitude")

number_of_hi_qs = int(0.1*len(ddm_calc.ddm_dataset.q)) #for getting highest 10% of q's

plt.axhline(y = ddm_calc.ddm_dataset.avg_image_ft[-1*number_of_hi_qs:].mean())

[8]:

<matplotlib.lines.Line2D at 0x2299c86dbb0>

The other methods for getting the background (and from the background then estimating the amplitude) are:

- background_method = 1: \(B\) will be the minimum of the DDM matrix
- background_method = 2: \(B\) will be the average (over all lag times) of the DDM matrix at the largest wavevector \(q\)
- background_method = 3: \(B\) = 0
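The three alternatives listed above can be illustrated on a synthetic DDM matrix. This is a sketch under assumptions, not the code PyDDM runs: the matrix is generated from a made-up amplitude and decay rate with a known background of 190, and the q = 0 column is excluded from the minimum because it is filled with zeros (how PyDDM treats that column is not shown here).

```python
import numpy as np

# Synthetic DDM matrix D(lagtime, q) = A(q) * (1 - f(q, dt)) + B
true_B = 190.0
lagtimes = np.arange(1, 41) / 41.7            # seconds
q = np.arange(64) * 0.2                       # um^-1, with q[0] = 0
amplitude = 1e4 * np.exp(-q)                  # decays toward zero at high q
isf = np.exp(-0.6 * q[None, :] ** 2 * lagtimes[:, None])
ddm_matrix = amplitude[None, :] * (1.0 - isf) + true_B
ddm_matrix[:, 0] = 0.0                        # mimic the empty q = 0 column

# Method 1: minimum of the DDM matrix (q = 0 column excluded; an assumption here)
B_method1 = ddm_matrix[:, 1:].min()
# Method 2: average over all lag times at the largest wavevector
B_method2 = ddm_matrix[:, -1].mean()
# Method 3: background fixed at zero
B_method3 = 0.0

print(B_method1, B_method2, B_method3)
```

On this synthetic data, methods 1 and 2 both land slightly above the true background, since any residual amplitude at high \(q\) adds to the estimate.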

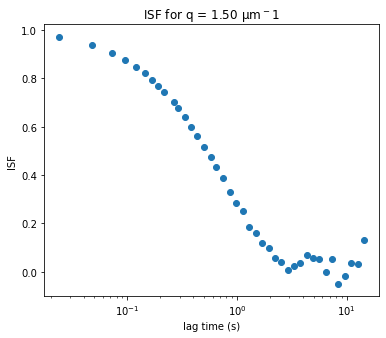

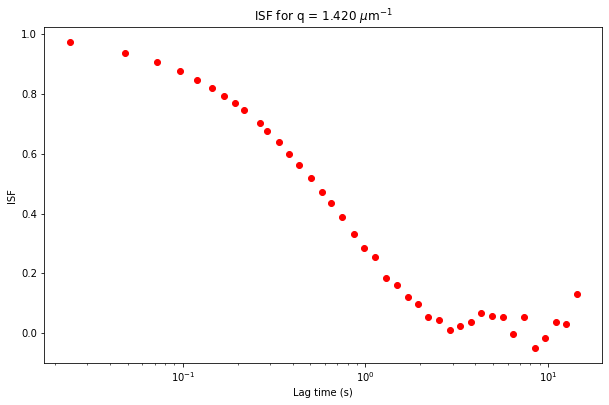

If we know \(A(q)\) and \(B\), then we can find the intermediate scattering function, \(f(q, \Delta t)\), by assuming that the DDM matrix is equal to \(D (q, \Delta t) = A(q) \times \left[ 1 - f(q, \Delta t) \right] + B\).

The ISF is stored as the data variable ISF in the ddm_dataset.
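Solving the relation above for the ISF makes explicit how it depends on the estimates of \(A(q)\) and \(B\):

\[
f(q, \Delta t) = 1 - \frac{D(q, \Delta t) - B}{A(q)}
\]

Because \(A(q)\) appears in the denominator, an error in \(B\) distorts the ISF most at wavevectors where \(A(q)\) is small.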

[9]:

#############################################################################################################

# Plotting the intermediate scattering function (ISF) for a particular value of q as a function of lag time #

#############################################################################################################

plt.figure(figsize=(10,10/1.618))

plt.semilogx(ddm_calc.ddm_dataset.lagtime, ddm_calc.ddm_dataset.ISF.sel(q=1.5, method='nearest'), 'ro')

plt.xlabel("Lag time (s)")

plt.ylabel("ISF")

plt.title(r"ISF for q = %.3f $\mu$m$^{-1}$" % ddm_calc.ddm_dataset.q.sel(q=1.5, method='nearest'));

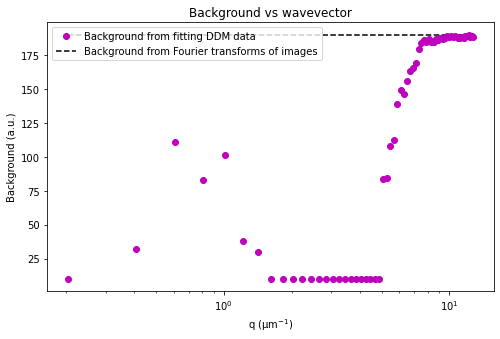

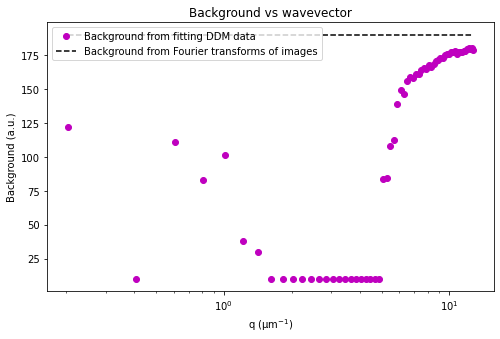

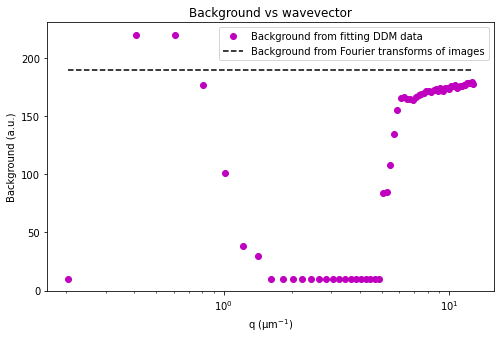

Checking the background estimate#

We expect the ISF to go from 1 to 0. We may not see exactly this behavior in the ISF for all wavevectors. At larger wavevectors, the dynamics might be fast and we might not be taking images fast enough to see the early decay of the ISF. Conversely, at lower wavevectors, the dynamics might be slow and we may not be able to calculate the ISF for large enough lag times to see it decay all the way to zero. Additionally, for non-ergodic dynamics, the ISF may not decay to zero but to some value sometimes referred to as the non-ergodicity parameter.

But another reason that the ISF may not go from 1 to 0 is that we did a poor job in estimating the background, \(B\). Using the default method (background_method = 0), we try to estimate \(B\) by looking at the average Fourier transforms of all the images, radially averaging that, and assuming that the amplitude goes to zero at large wavevectors. But another way to estimate \(B\) is to look at the DDM matrix at large wavevectors, as is plotted below.

[10]:

###################################################################################################

# Plotting the radially averaged DDM matrix for the greatest value of q as a function of lag time #

###################################################################################################

q_index = -1 #index of the list of q values

plt.figure(figsize=(10,10/1.618))

plt.semilogx(ddm_calc.ddm_dataset.lagtime, ddm_calc.ddm_dataset.ddm_matrix[:,q_index], 'ro')

plt.xlabel("Lag time (s)")

plt.ylabel("DDM matrix")

plt.title(r"DDM matrix for $q$ = %.3f $\mu$m$^{-1}$" % ddm_calc.ddm_dataset.q[q_index]);

plt.axhline(y = ddm_calc.ddm_dataset.B)

[10]:

<matplotlib.lines.Line2D at 0x229a6721f70>

The DDM matrix should go from the background, \(B\), to the background plus amplitude, \(B + A\), as a function of the lag time. From the above plot, it looks like we overestimated the background: at short lag times the DDM matrix falls well below the horizontal line at the estimated \(B\) of about 211 (perhaps the amplitude hadn’t gone quite to zero by the last wavevectors we measure?). From the plot, the background is clearly less than 195.

Let’s estimate the background at about 190. We can make this adjustment with the recalculate_ISF_with_new_background function.
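To get a feel for how strongly the ISF at high \(q\) depends on the background, we can redo the calculation by hand for a single point. The helper `isf_from_ddm` below is hypothetical (it is not part of PyDDM); it assumes \(D = A \left[ 1 - f \right] + B\) with \(A = 2 \times\) avg_image_ft \(- B\), and uses values at the largest wavevector and shortest lag time copied from the datasets printed in this walkthrough.

```python
def isf_from_ddm(ddm_value, avg_image_ft_value, background):
    """ISF from D = A * (1 - f) + B, with A = 2 * avg_image_ft - B (hypothetical helper)."""
    amplitude = 2.0 * avg_image_ft_value - background
    return 1.0 - (ddm_value - background) / amplitude

# Values at the largest q and shortest lag time, copied from the printed dataset
ddm_value = 192.76671589
avg_image_ft_value = 105.326832

# Default background: twice the mean of the last six avg_image_ft values (shown as 211.2)
isf_default_B = isf_from_ddm(ddm_value, avg_image_ft_value, background=211.173656)
# Background chosen by eye from the plot of the DDM matrix at the largest q
isf_new_B = isf_from_ddm(ddm_value, avg_image_ft_value, background=190.0)

print(f"ISF with B = 211.17: {isf_default_B:.1f}")
print(f"ISF with B = 190:    {isf_new_B:.3f}")
```

With the default background the amplitude at this wavevector is slightly negative and the ISF comes out near -34, while with \(B = 190\) it is about 0.866, a physically sensible value between 0 and 1.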

[11]:

new_ddm_matrix = ddm.recalculate_ISF_with_new_background(ddm_calc.ddm_dataset, background_val=190)

display(new_ddm_matrix)

<xarray.Dataset>

Dimensions: (lagtime: 40, q_y: 128, q_x: 128, q: 64, y: 128, x: 128, frames: 40)

Coordinates:

* lagtime (lagtime) float64 0.02398 0.04796 0.07194 ... 12.59 14.36

framelag (frames) int32 1 2 3 4 5 6 7 ... 308 352 402 459 525 599

* q_y (q_y) float64 -12.98 -12.78 -12.58 ... 12.37 12.58 12.78

* q_x (q_x) float64 -12.98 -12.78 -12.58 ... 12.37 12.58 12.78

* q (q) float64 0.0 0.2028 0.4057 0.6085 ... 12.37 12.58 12.78

* y (y) int32 0 1 2 3 4 5 6 7 ... 121 122 123 124 125 126 127

* x (x) int32 0 1 2 3 4 5 6 7 ... 121 122 123 124 125 126 127

Dimensions without coordinates: frames

Data variables:

ddm_matrix_full (lagtime, q_y, q_x) float64 194.5 183.5 ... 192.0 196.8

ddm_matrix (lagtime, q) float64 0.0 294.2 321.4 ... 207.8 201.1 200.4

first_image (y, x) float64 128.0 149.0 173.0 ... 175.0 229.0 215.0

avg_image_ft (q) float64 0.0 1.293e+05 5.225e+03 ... 105.3 104.7 105.3

num_pairs_per_dt (lagtime) int32 2999 2998 2997 1498 1498 ... 20 17 15 13

B int32 190

B_std float64 1.491

Amplitude (q) float64 -190.0 2.585e+05 1.026e+04 ... 19.47 20.65

ISF (lagtime, q) float64 0.0 0.9996 0.9872 ... 0.432 0.4966

Attributes: (12/24)

units: Intensity

lagtime: sec

q: μm$^{-1}$

x: pixels

y: pixels

info: ddm_matrix is the averages of FFT difference ima...

... ...

split_into_4_rois: no

use_windowing_function: no

binning: no

bin_size: 2

central_angle: no

angle_range: no

- lagtime: 40

- q_y: 128

- q_x: 128

- q: 64

- y: 128

- x: 128

- frames: 40

- lagtime(lagtime)float640.02398 0.04796 ... 12.59 14.36

array([ 0.023981, 0.047962, 0.071942, 0.095923, 0.119904, 0.143885, 0.167866, 0.191847, 0.215827, 0.263789, 0.28777 , 0.335731, 0.383693, 0.431655, 0.503597, 0.57554 , 0.647482, 0.743405, 0.863309, 0.983213, 1.127098, 1.294964, 1.486811, 1.702638, 1.942446, 2.206235, 2.541966, 2.901679, 3.309353, 3.788969, 4.316547, 4.940048, 5.659472, 6.450839, 7.386091, 8.441247, 9.640288, 11.007194, 12.589928, 14.364508]) - framelag(frames)int321 2 3 4 5 6 ... 352 402 459 525 599

array([ 1, 2, 3, 4, 5, 6, 7, 8, 9, 11, 12, 14, 16, 18, 21, 24, 27, 31, 36, 41, 47, 54, 62, 71, 81, 92, 106, 121, 138, 158, 180, 206, 236, 269, 308, 352, 402, 459, 525, 599]) - q_y(q_y)float64-12.98 -12.78 ... 12.58 12.78

array([-12.981788, -12.778947, -12.576107, -12.373267, -12.170426, -11.967586, -11.764745, -11.561905, -11.359064, -11.156224, -10.953383, -10.750543, -10.547703, -10.344862, -10.142022, -9.939181, -9.736341, -9.5335 , -9.33066 , -9.12782 , -8.924979, -8.722139, -8.519298, -8.316458, -8.113617, -7.910777, -7.707937, -7.505096, -7.302256, -7.099415, -6.896575, -6.693734, -6.490894, -6.288053, -6.085213, -5.882373, -5.679532, -5.476692, -5.273851, -5.071011, -4.86817 , -4.66533 , -4.46249 , -4.259649, -4.056809, -3.853968, -3.651128, -3.448287, -3.245447, -3.042607, -2.839766, -2.636926, -2.434085, -2.231245, -2.028404, -1.825564, -1.622723, -1.419883, -1.217043, -1.014202, -0.811362, -0.608521, -0.405681, -0.20284 , 0. , 0.20284 , 0.405681, 0.608521, 0.811362, 1.014202, 1.217043, 1.419883, 1.622723, 1.825564, 2.028404, 2.231245, 2.434085, 2.636926, 2.839766, 3.042607, 3.245447, 3.448287, 3.651128, 3.853968, 4.056809, 4.259649, 4.46249 , 4.66533 , 4.86817 , 5.071011, 5.273851, 5.476692, 5.679532, 5.882373, 6.085213, 6.288053, 6.490894, 6.693734, 6.896575, 7.099415, 7.302256, 7.505096, 7.707937, 7.910777, 8.113617, 8.316458, 8.519298, 8.722139, 8.924979, 9.12782 , 9.33066 , 9.5335 , 9.736341, 9.939181, 10.142022, 10.344862, 10.547703, 10.750543, 10.953383, 11.156224, 11.359064, 11.561905, 11.764745, 11.967586, 12.170426, 12.373267, 12.576107, 12.778947]) - q_x(q_x)float64-12.98 -12.78 ... 12.58 12.78

array([-12.981788, -12.778947, -12.576107, -12.373267, -12.170426, -11.967586, -11.764745, -11.561905, -11.359064, -11.156224, -10.953383, -10.750543, -10.547703, -10.344862, -10.142022, -9.939181, -9.736341, -9.5335 , -9.33066 , -9.12782 , -8.924979, -8.722139, -8.519298, -8.316458, -8.113617, -7.910777, -7.707937, -7.505096, -7.302256, -7.099415, -6.896575, -6.693734, -6.490894, -6.288053, -6.085213, -5.882373, -5.679532, -5.476692, -5.273851, -5.071011, -4.86817 , -4.66533 , -4.46249 , -4.259649, -4.056809, -3.853968, -3.651128, -3.448287, -3.245447, -3.042607, -2.839766, -2.636926, -2.434085, -2.231245, -2.028404, -1.825564, -1.622723, -1.419883, -1.217043, -1.014202, -0.811362, -0.608521, -0.405681, -0.20284 , 0. , 0.20284 , 0.405681, 0.608521, 0.811362, 1.014202, 1.217043, 1.419883, 1.622723, 1.825564, 2.028404, 2.231245, 2.434085, 2.636926, 2.839766, 3.042607, 3.245447, 3.448287, 3.651128, 3.853968, 4.056809, 4.259649, 4.46249 , 4.66533 , 4.86817 , 5.071011, 5.273851, 5.476692, 5.679532, 5.882373, 6.085213, 6.288053, 6.490894, 6.693734, 6.896575, 7.099415, 7.302256, 7.505096, 7.707937, 7.910777, 8.113617, 8.316458, 8.519298, 8.722139, 8.924979, 9.12782 , 9.33066 , 9.5335 , 9.736341, 9.939181, 10.142022, 10.344862, 10.547703, 10.750543, 10.953383, 11.156224, 11.359064, 11.561905, 11.764745, 11.967586, 12.170426, 12.373267, 12.576107, 12.778947]) - q(q)float640.0 0.2028 0.4057 ... 12.58 12.78

array([ 0. , 0.20284 , 0.405681, 0.608521, 0.811362, 1.014202, 1.217043, 1.419883, 1.622723, 1.825564, 2.028404, 2.231245, 2.434085, 2.636926, 2.839766, 3.042607, 3.245447, 3.448287, 3.651128, 3.853968, 4.056809, 4.259649, 4.46249 , 4.66533 , 4.86817 , 5.071011, 5.273851, 5.476692, 5.679532, 5.882373, 6.085213, 6.288053, 6.490894, 6.693734, 6.896575, 7.099415, 7.302256, 7.505096, 7.707937, 7.910777, 8.113617, 8.316458, 8.519298, 8.722139, 8.924979, 9.12782 , 9.33066 , 9.5335 , 9.736341, 9.939181, 10.142022, 10.344862, 10.547703, 10.750543, 10.953383, 11.156224, 11.359064, 11.561905, 11.764745, 11.967586, 12.170426, 12.373267, 12.576107, 12.778947]) - y(y)int320 1 2 3 4 5 ... 123 124 125 126 127

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127]) - x(x)int320 1 2 3 4 5 ... 123 124 125 126 127

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127])

- ddm_matrix_full(lagtime, q_y, q_x)float64194.5 183.5 189.9 ... 192.0 196.8

array([[[194.53933642, 183.46865602, 189.88360447, ..., 185.13501835, 189.88360447, 183.46865602], [180.54977527, 184.19554752, 188.62431121, ..., 190.98754022, 197.43847912, 186.62178023], [192.70890797, 195.93098872, 189.09845247, ..., 190.12737145, 189.83523821, 192.21421257], ..., [178.74506797, 186.95925279, 189.9972449 , ..., 190.31796269, 193.86216905, 184.71498966], [192.70890797, 192.21421257, 189.83523821, ..., 194.82373533, 189.09845247, 195.93098872], [180.54977527, 186.62178023, 197.43847912, ..., 189.320714 , 188.62431121, 184.19554752]], [[192.53678443, 184.98536047, 183.00178339, ..., 189.93758386, 183.00178339, 184.98536047], [182.22340665, 190.38208686, 185.90468391, ..., 188.79454507, 188.52514631, 188.48204681], [192.71415943, 196.71513926, 189.84504279, ..., 187.27424593, 187.74393152, 186.99244495], ... [224.60947387, 108.81451286, 189.02957545, ..., 137.44506309, 348.30437843, 228.73579002], [216.96920343, 166.46373793, 174.79469182, ..., 166.69258334, 126.31953212, 140.52349943], [164.20961535, 169.57957548, 133.76022938, ..., 125.77861768, 159.69240297, 247.86589588]], [[ 75.33297495, 174.05006796, 155.35027909, ..., 233.98667431, 155.35027909, 174.05006796], [167.22701098, 196.84765156, 192.02546007, ..., 131.6447673 , 119.41026107, 235.53961919], [132.75085561, 149.1865022 , 144.63052109, ..., 237.29431965, 217.6141243 , 128.83335999], ..., [244.06105601, 195.72999615, 198.70767797, ..., 346.86825864, 208.46454057, 182.9136883 ], [132.75085561, 128.83335999, 217.6141243 , ..., 137.87697986, 144.63052109, 149.1865022 ], [167.22701098, 235.53961919, 119.41026107, ..., 165.83630492, 192.02546007, 196.84765156]]]) - ddm_matrix(lagtime, q)float640.0 294.2 321.4 ... 201.1 200.4

array([[ 0. , 294.18943955, 321.41822078, ..., 192.93331805, 193.46556826, 192.76671589], [ 0. , 432.05714374, 490.9677582 , ..., 194.84772175, 195.06799833, 194.40459328], [ 0. , 535.36374381, 619.95883018, ..., 196.12616285, 196.21094092, 195.79399928], ..., [ 0. , 3966.56056507, 5791.89215548, ..., 205.00017796, 211.36062787, 212.61362392], [ 0. , 4167.1712352 , 5456.14848464, ..., 203.8398704 , 205.38454854, 207.78696548], [ 0. , 3716.77402137, 6067.88205869, ..., 207.76466695, 201.06258553, 200.39635905]]) - first_image(y, x)float64128.0 149.0 173.0 ... 229.0 215.0

array([[128., 149., 173., ..., 178., 224., 255.], [164., 163., 166., ..., 182., 197., 255.], [208., 182., 175., ..., 178., 210., 255.], ..., [147., 162., 162., ..., 182., 196., 165.], [201., 214., 234., ..., 189., 174., 178.], [255., 255., 255., ..., 175., 229., 215.]]) - avg_image_ft(q)float640.0 1.293e+05 ... 104.7 105.3

array([0.00000000e+00, 1.29341509e+05, 5.22511731e+03, 5.86989569e+03, 8.50478146e+03, 9.68446541e+03, 9.99103750e+03, 1.03319622e+04, 1.00264087e+04, 1.08058729e+04, 1.16343568e+04, 1.38669622e+04, 1.68988722e+04, 1.98642184e+04, 2.11800678e+04, 2.14311022e+04, 2.06130601e+04, 1.80258072e+04, 1.42561639e+04, 1.04082328e+04, 7.27537584e+03, 4.68549415e+03, 2.88015028e+03, 1.77658315e+03, 1.06139768e+03, 6.83505113e+02, 4.97908069e+02, 4.18238638e+02, 3.38383278e+02, 2.72050227e+02, 2.35672326e+02, 2.24525405e+02, 2.03430129e+02, 1.78436880e+02, 1.65009527e+02, 1.49291391e+02, 1.41201296e+02, 1.33121051e+02, 1.29764821e+02, 1.28159612e+02, 1.24131175e+02, 1.24052104e+02, 1.21220015e+02, 1.19076951e+02, 1.17509287e+02, 1.15310101e+02, 1.15268069e+02, 1.12572652e+02, 1.11849673e+02, 1.11765243e+02, 1.10247178e+02, 1.09703002e+02, 1.08834403e+02, 1.09662333e+02, 1.08597899e+02, 1.07728419e+02, 1.07789289e+02, 1.06907057e+02, 1.07036538e+02, 1.05981891e+02, 1.05125261e+02, 1.05313067e+02, 1.04737380e+02, 1.05326832e+02]) - num_pairs_per_dt(lagtime)int322999 2998 2997 1498 ... 20 17 15 13

array([2999, 2998, 2997, 1498, 1498, 1497, 998, 998, 997, 748, 747, 598, 498, 497, 426, 372, 331, 270, 247, 212, 185, 164, 140, 123, 109, 94, 81, 71, 63, 54, 47, 41, 35, 31, 27, 23, 20, 17, 15, 13]) - B()int32190

array(190)

- B_std()float641.491

array(1.49105881)

- Amplitude(q)float64-190.0 2.585e+05 ... 19.47 20.65

array([-1.90000000e+02, 2.58493018e+05, 1.02602346e+04, 1.15497914e+04, 1.68195629e+04, 1.91789308e+04, 1.97920750e+04, 2.04739243e+04, 1.98628174e+04, 2.14217457e+04, 2.30787136e+04, 2.75439244e+04, 3.36077443e+04, 3.95384367e+04, 4.21701356e+04, 4.26722045e+04, 4.10361202e+04, 3.58616144e+04, 2.83223278e+04, 2.06264656e+04, 1.43607517e+04, 9.18098831e+03, 5.57030056e+03, 3.36316630e+03, 1.93279536e+03, 1.17701023e+03, 8.05816138e+02, 6.46477276e+02, 4.86766556e+02, 3.54100454e+02, 2.81344652e+02, 2.59050811e+02, 2.16860258e+02, 1.66873759e+02, 1.40019053e+02, 1.08582782e+02, 9.24025916e+01, 7.62421017e+01, 6.95296420e+01, 6.63192247e+01, 5.82623495e+01, 5.81042089e+01, 5.24400299e+01, 4.81539029e+01, 4.50185732e+01, 4.06202012e+01, 4.05361388e+01, 3.51453041e+01, 3.36993461e+01, 3.35304868e+01, 3.04943557e+01, 2.94060037e+01, 2.76688057e+01, 2.93246657e+01, 2.71957975e+01, 2.54568387e+01, 2.55785786e+01, 2.38141133e+01, 2.40730764e+01, 2.19637827e+01, 2.02505220e+01, 2.06261336e+01, 1.94747592e+01, 2.06536633e+01]) - ISF(lagtime, q)float640.0 0.9996 0.9872 ... 0.432 0.4966

array([[ 0. , 0.99959694, 0.9871915 , ..., 0.85778634, 0.82204821, 0.86604236], [ 0. , 0.99906358, 0.97066658, ..., 0.76497186, 0.7397658 , 0.78674034], [ 0. , 0.99866393, 0.95809464, ..., 0.70299025, 0.6810774 , 0.71946869], ..., [ 0. , 0.98539009, 0.4540191 , ..., 0.27275862, -0.09683656, -0.09489651], [ 0. , 0.98461401, 0.4867419 , ..., 0.32901286, 0.21002625, 0.13879852], [ 0. , 0.98635641, 0.42712011, ..., 0.13873015, 0.43195264, 0.49663365]])

- units :

- Intensity

- lagtime :

- sec

- q :

- μm$^{-1}$

- x :

- pixels

- y :

- pixels

- info :

- ddm_matrix is the averages of FFT difference images, ravs are the radial averages

- BackgroundMethod :

- None

- OverlapMethod :

- 2

- DataDirectory :

- C:/Users/rmcgorty/Documents/GitHub/PyDDM/Examples/

- FileName :

- images_nobin_40x_128x128_8bit.tif

- pixel_size :

- 0.242

- frame_rate :

- 41.7

- starting_frame_number :

- no

- ending_frame_number :

- no

- number_lagtimes :

- 40

- first_lag_time :

- yes

- last_lag_time :

- 600

- crop_to_roi :

- no

- split_into_4_rois :

- no

- use_windowing_function :

- no

- binning :

- no

- bin_size :

- 2

- central_angle :

- no

- angle_range :

- no

Now let’s save this ddm_dataset. When the calculate_DDM_matrix method is executed, the ddm_dataset is automatically saved to disk. But we can overwrite that with the resave_ddm_dataset method.

[12]:

ddm_calc.resave_ddm_dataset(new_ddm_matrix)

Additional notes about the background#

The default way that this code estimates the background is to compute the power spectrum of the images, as described above. That is, we find \(\left< | \tilde{I}(q, t) |^2 \right>_t\) and recognize that \(\left< | \tilde{I}(q, t) |^2 \right>_t = 0.5 \times \left[ A(q) + B \right]\) and assume that as \(q \rightarrow q_{max}\), \(A(q) \rightarrow 0\). Therefore, \(\left< | \tilde{I}(q_{max}, t) |^2 \right>_t \approx 0.5\times B\) (see [Cerbino 2017] or Eq. 5 in [Giavazzi 2018]).

But there are other ways. In [Kurzthaler 2018], they set the background, \(B\), to zero. They note that for their fluorescence imaging “the camera noise is usually negligible.” However, they note that for bright-field or phase-contrast modalities, the camera noise is not negligible and \(B\) will be non-zero.

In [Bayles 2017], they approximate \(B\) by \(\min \left[ D(q, \Delta t_{min}) \right]\). But they note that the frame rate needs to be “sufficiently small” and that this will give an overestimate of \(B\). To try this, use background_method = 1 as an optional keyword parameter in the function calculate_DDM_matrix (or set the value of background_method in the YAML file under Analysis_parameters).

[Cerbino 2017] Cerbino, R., Piotti, D., Buscaglia, M. & Giavazzi, F. Dark field differential dynamic microscopy enables accurate characterization of the roto-translational dynamics of bacteria and colloidal clusters. J. Phys.: Condens. Matter 30, 025901 (2017).

[Giavazzi 2018] Giavazzi, F., Malinverno, C., Scita, G. & Cerbino, R. Tracking-Free Determination of Single-Cell Displacements and Division Rates in Confluent Monolayers. Front. Phys. 6, (2018).

[Kurzthaler 2018] Kurzthaler, C. et al. Probing the Spatiotemporal Dynamics of Catalytic Janus Particles with Single-Particle Tracking and Differential Dynamic Microscopy. Phys. Rev. Lett. 121, 078001 (2018).

[Bayles 2017] Bayles, A. V., Squires, T. M. & Helgeson, M. E. Probe microrheology without particle tracking by differential dynamic microscopy. Rheol Acta 56, 863–869 (2017).

Initializing DDM_Fit class and fitting our data to a model#

We initialize the DDM_Fit class by passing it the yaml file containing our analysis parameters.

[13]:

#Note that to initialize the DDM_Fit class we need to pass it the YAML file containing the parameters

# *or* a dictionary with the same parameters.

ddm_fit = ddm.DDM_Fit(ddm_calc.data_yaml)

| Parameter | Initial guess | Minimum | Maximum |
|---|---|---|---|
| Amplitude | 100.0 | 1.000 | 1000000.0 |
| Tau | 1.0 | 0.001 | 10.0 |
| Background | 25000.0 | 0.000 | 10000000.0 |
| StretchingExp | 1.0 | 0.500 | 1.1 |

Loading file C:/Users/rmcgorty/Documents/GitHub/PyDDM/Examples/images_nobin_40x_128x128_8bit_ddmmatrix.nc ...

Based on what was done above in terms of estimating the background, we found that \(B\) should be about 190. We use that estimate of the background to determine \(A(q)\) and the intermediate scattering function, \(f(q,\Delta t)\). We could proceed to then fit the ISF. But here we choose not to. We fit the DDM matrix and have the amplitude and background, \(A\) and \(B\), be fitting parameters. But we can constrain these parameters based on what we found above.
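As a rough picture of what is being fit, the sketch below writes out a DDM-matrix model with a stretched-exponential ISF for a single wavevector. The functional form is an assumption suggested by the parameter names (Amplitude, Tau, Background, StretchingExp) and by \(D = A \left[ 1 - f \right] + B\); the function name is hypothetical, and PyDDM's own model definition should be consulted for the exact expression.

```python
import numpy as np

def ddm_matrix_single_exponential(lagtime, amplitude, tau, background, stretching_exp):
    """D(dt) = A * [1 - exp(-(dt / tau)**s)] + B for one wavevector (assumed form)."""
    isf = np.exp(-(np.asarray(lagtime, dtype=float) / tau) ** stretching_exp)
    return amplitude * (1.0 - isf) + background

# Limits of the model: it starts at B and plateaus at A + B
start = ddm_matrix_single_exponential(0.0, 100.0, 1.0, 190.0, 1.0)
at_tau = ddm_matrix_single_exponential(1.0, 100.0, 1.0, 190.0, 1.0)
plateau = ddm_matrix_single_exponential(1e3, 100.0, 1.0, 190.0, 1.0)

print(start, at_tau, plateau)
```

The short-time limit is what ties the Background parameter to the low plateau of the DDM matrix, which is why an independent estimate of \(B\) is a useful constraint.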

[14]:

#We update the initial guess for the background and the lower and upper bounds

ddm_fit.set_parameter_initial_guess('Background', 190)

ddm_fit.set_parameter_bounds('Background', [10, 220])

Parameter 'Background' set to 190.

Parameter 'Background' lower limit set to 10.

Parameter 'Background' upper limit set to 220.

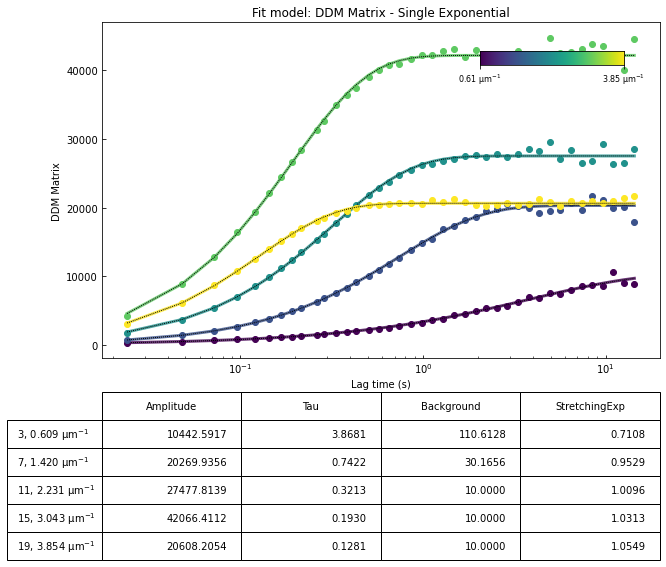

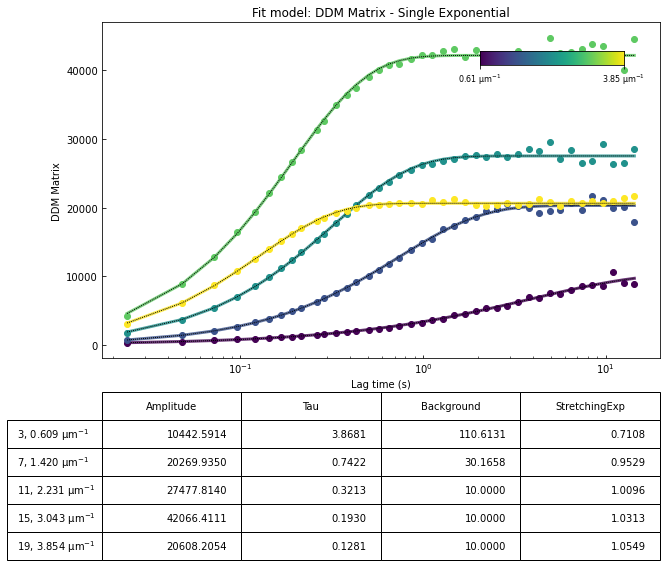

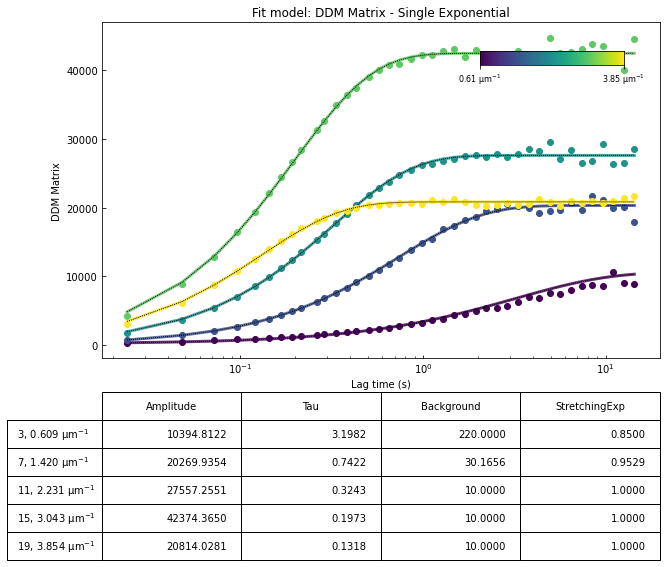

Our first attempt at fitting the data#

When we execute the fit below, we can use the optional argument use_A_from_images_as_guess set to True. This will use the value of \(A(q)\) we estimated from the average Fourier transform of all images and the estimated background. We can further limit the bounds on \(A(q)\) with the optional parameter update_limits_on_A set to True. This will restrict the range that \(A(q)\) can take to be within 10% of the estimated \(A(q)\). If we want the bounds to be stricter or looser than 10%, we can change the optional parameter updated_lims_on_A_fraction to be something other than 0.1 (the default).
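The effect of restricting \(A(q)\) to within a fraction of its estimate can be sketched as follows. The helper `amplitude_bounds` is hypothetical and only handles positive estimates; how PyDDM treats non-positive estimates (such as the q = 0 entry) is not covered here.

```python
import numpy as np

def amplitude_bounds(estimated_A, fraction=0.1):
    """Lower and upper bounds at +/- `fraction` around a positive estimate of A(q)."""
    estimated_A = np.asarray(estimated_A, dtype=float)
    lower = estimated_A * (1.0 - fraction)
    upper = estimated_A * (1.0 + fraction)
    return lower, upper

# Two estimated amplitudes taken from the Amplitude variable printed earlier
A_est = np.array([2.58493018e5, 2.06536633e1])
lower, upper = amplitude_bounds(A_est)

print(lower, upper)
```

In the fit output shown further below, the fitted amplitudes at these two wavevectors (2.326e+05 and 18.59) are 0.9 times the estimates, which is consistent with the fit running into the lower bound there.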

[15]:

#Note that the method `fit` has many optional parameters

fit01 = ddm_fit.fit(name_fit = 'fit01', display_table=False, use_A_from_images_as_guess=True, update_limits_on_A=True)

In function 'get_tau_vs_q_fit', using new tau...

Fit is saved in fittings dictionary with key 'fit01'.

[16]:

#Generate plots for inspecting the outcome of the fits

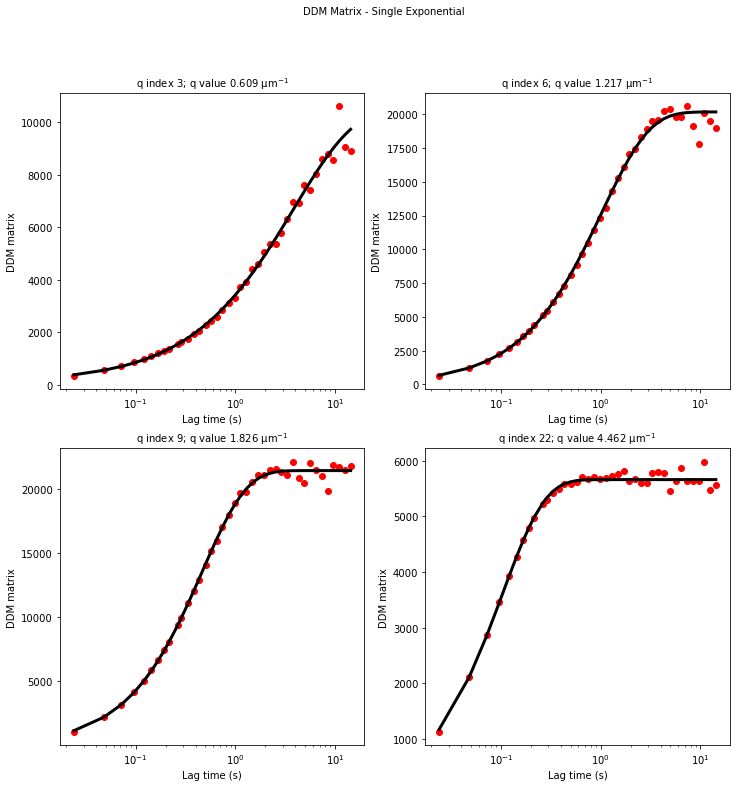

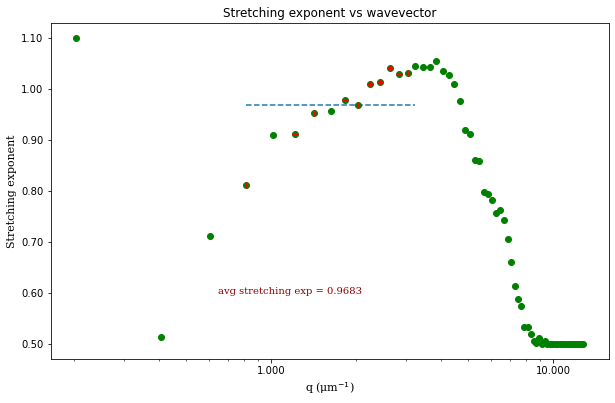

ddm.fit_report(fit01, q_indices=[3,6,9,22], forced_qs=[4,16], use_new_tau=True, show=True)

In function 'get_tau_vs_q_fit', using new tau...

In hf.plot_one_tau_vs_q function, using new tau...

[16]:

<xarray.Dataset>

Dimensions: (parameter: 4, q: 64, lagtime: 40)

Coordinates:

* parameter (parameter) <U13 'Amplitude' 'Tau' ... 'StretchingExp'

* q (q) float64 0.0 0.2028 0.4057 0.6085 ... 12.37 12.58 12.78

* lagtime (lagtime) float64 0.02398 0.04796 0.07194 ... 12.59 14.36

Data variables:

parameters (parameter, q) float64 1.0 2.326e+05 ... 0.705 0.7446

theory (lagtime, q) float64 10.0 315.0 338.5 ... 206.7 206.8 206.9

isf_data (lagtime, q) float64 0.0 0.9996 0.9872 ... 0.432 0.4966

ddm_matrix_data (lagtime, q) float64 0.0 294.2 321.4 ... 207.8 201.1 200.4

A (q) float64 -190.0 2.585e+05 1.026e+04 ... 19.47 20.65

B int32 190

Attributes: (12/18)

model: DDM Matrix - Single Exponential

data_to_use: DDM Matrix

initial_params_dict: ["{'n': 0, 'value': 100.0, 'limits': [1.0...

effective_diffusion_coeff: 0.6168642315520588

tau_vs_q_slope: [-1.98005917]

msd_alpha: [1.01007082]

... ...

DataDirectory: C:/Users/rmcgorty/Documents/GitHub/PyDDM/...

FileName: images_nobin_40x_128x128_8bit.tif

pixel_size: 0.242

frame_rate: 41.7

BackgroundMethod: None

OverlapMethod: 2

- parameter: 4

- q: 64

- lagtime: 40

- parameter(parameter)<U13'Amplitude' ... 'StretchingExp'

array(['Amplitude', 'Tau', 'Background', 'StretchingExp'], dtype='<U13')

- q(q)float640.0 0.2028 0.4057 ... 12.58 12.78

array([ 0. , 0.20284 , 0.405681, 0.608521, 0.811362, 1.014202, 1.217043, 1.419883, 1.622723, 1.825564, 2.028404, 2.231245, 2.434085, 2.636926, 2.839766, 3.042607, 3.245447, 3.448287, 3.651128, 3.853968, 4.056809, 4.259649, 4.46249 , 4.66533 , 4.86817 , 5.071011, 5.273851, 5.476692, 5.679532, 5.882373, 6.085213, 6.288053, 6.490894, 6.693734, 6.896575, 7.099415, 7.302256, 7.505096, 7.707937, 7.910777, 8.113617, 8.316458, 8.519298, 8.722139, 8.924979, 9.12782 , 9.33066 , 9.5335 , 9.736341, 9.939181, 10.142022, 10.344862, 10.547703, 10.750543, 10.953383, 11.156224, 11.359064, 11.561905, 11.764745, 11.967586, 12.170426, 12.373267, 12.576107, 12.778947]) - lagtime(lagtime)float640.02398 0.04796 ... 12.59 14.36

array([ 0.023981, 0.047962, 0.071942, 0.095923, 0.119904, 0.143885, 0.167866, 0.191847, 0.215827, 0.263789, 0.28777 , 0.335731, 0.383693, 0.431655, 0.503597, 0.57554 , 0.647482, 0.743405, 0.863309, 0.983213, 1.127098, 1.294964, 1.486811, 1.702638, 1.942446, 2.206235, 2.541966, 2.901679, 3.309353, 3.788969, 4.316547, 4.940048, 5.659472, 6.450839, 7.386091, 8.441247, 9.640288, 11.007194, 12.589928, 14.364508])

- parameters(parameter, q)float641.0 2.326e+05 ... 0.705 0.7446

array([[1.00000000e+00, 2.32643716e+05, 9.23421116e+03, 1.04425917e+04, 1.65958488e+04, 1.87741627e+04, 2.01262093e+04, 2.02699356e+04, 1.93938141e+04, 2.14281346e+04, 2.33743051e+04, 2.74778139e+04, 3.34522708e+04, 3.95131276e+04, 4.17820421e+04, 4.20664112e+04, 4.07569060e+04, 3.57098235e+04, 2.84201318e+04, 2.06082054e+04, 1.43591503e+04, 9.28277724e+03, 5.65119988e+03, 3.47725293e+03, 2.06716941e+03, 1.22311564e+03, 8.76927421e+02, 6.90124480e+02, 5.35443212e+02, 3.89510499e+02, 3.09479117e+02, 2.84955892e+02, 2.38546284e+02, 1.83561135e+02, 1.54020959e+02, 1.19441061e+02, 9.04713776e+01, 7.32803000e+01, 6.33150620e+01, 5.96873022e+01, 5.24361145e+01, 5.22937881e+01, 4.71960269e+01, 4.33385126e+01, 4.05167159e+01, 3.65581811e+01, 3.64825249e+01, 3.16307736e+01, 3.03294115e+01, 3.01774381e+01, 2.74449201e+01, 2.64654033e+01, 2.49019252e+01, 2.63921992e+01, 2.44762177e+01, 2.29111548e+01, 2.30207207e+01, 2.14327020e+01, 2.16657687e+01, 1.97674044e+01, 1.82254698e+01, 1.85635203e+01, 1.75272833e+01, 1.85882970e+01], [1.00000000e+01, 1.00000000e+01, 1.00000000e+01, 3.86806763e+00, 2.55606871e+00, 1.42436450e+00, 1.03553610e+00, 7.42218975e-01, 5.52015441e-01, 4.65091456e-01, 3.96220641e-01, 3.21261104e-01, 2.90633158e-01, 2.48487749e-01, 2.13902731e-01, 1.92959729e-01, ... 
1.88872932e+02, 1.88581861e+02, 1.89252080e+02, 1.88386083e+02, 1.88981350e+02, 1.87924484e+02, 1.87679912e+02, 1.87688222e+02, 1.87969231e+02, 1.87747866e+02, 1.88648024e+02, 1.89106434e+02, 1.89427805e+02, 1.88135686e+02, 1.89288727e+02, 1.88358949e+02], [1.10000000e+00, 1.10000000e+00, 5.61916398e-01, 7.10762250e-01, 8.11577778e-01, 9.09132560e-01, 9.11750595e-01, 9.52912598e-01, 9.57434030e-01, 9.79312824e-01, 9.69590565e-01, 1.00961966e+00, 1.01501443e+00, 1.04072569e+00, 1.03076437e+00, 1.03128893e+00, 1.04638759e+00, 1.04446796e+00, 1.04406777e+00, 1.05492850e+00, 1.03622512e+00, 1.02767912e+00, 1.01091421e+00, 9.75980332e-01, 9.19591679e-01, 9.11770097e-01, 8.60156028e-01, 8.59201102e-01, 7.98604397e-01, 7.94550993e-01, 7.81941075e-01, 7.57373189e-01, 7.63314218e-01, 7.33617919e-01, 6.92531764e-01, 6.47957554e-01, 6.96849842e-01, 7.07400255e-01, 7.43780117e-01, 6.79390232e-01, 7.06264342e-01, 6.76778617e-01, 6.50957583e-01, 6.69603282e-01, 6.90556765e-01, 6.62360386e-01, 7.05250808e-01, 6.76426769e-01, 7.21412072e-01, 7.22245349e-01, 6.46001050e-01, 6.55461922e-01, 6.71431019e-01, 6.24491328e-01, 6.21572597e-01, 6.59109603e-01, 6.24070701e-01, 6.10418310e-01, 6.90625300e-01, 6.72248157e-01, 7.68715009e-01, 7.20353863e-01, 7.04971605e-01, 7.44597078e-01]]) - theory(lagtime, q)float6410.0 315.0 338.5 ... 206.8 206.9

array([[1.00013109e+01, 3.14969814e+02, 3.38493932e+02, ..., 1.92529023e+02, 1.93101914e+02, 1.92287259e+02], [1.00028078e+01, 6.63227156e+02, 4.80661006e+02, ..., 1.94802592e+02, 1.95066214e+02, 1.94459478e+02], [1.00043826e+01, 1.02958077e+03, 5.91814578e+02, ..., 1.96469200e+02, 1.96522549e+02, 1.96093000e+02], ..., [1.06708801e+01, 1.56086044e+05, 6.05266368e+03, ..., 2.06699206e+02, 2.06816010e+02, 2.06947246e+02], [1.07242679e+01, 1.68506368e+05, 6.30794233e+03, ..., 2.06699206e+02, 2.06816010e+02, 2.06947246e+02], [1.07744986e+01, 1.80192244e+05, 6.55592511e+03, ..., 2.06699206e+02, 2.06816010e+02, 2.06947246e+02]]) - isf_data(lagtime, q)float640.0 0.9996 0.9872 ... 0.432 0.4966

array([[ 0. , 0.99959694, 0.9871915 , ..., 0.85778634, 0.82204821, 0.86604236], [ 0. , 0.99906358, 0.97066658, ..., 0.76497186, 0.7397658 , 0.78674034], [ 0. , 0.99866393, 0.95809464, ..., 0.70299025, 0.6810774 , 0.71946869], ..., [ 0. , 0.98539009, 0.4540191 , ..., 0.27275862, -0.09683656, -0.09489651], [ 0. , 0.98461401, 0.4867419 , ..., 0.32901286, 0.21002625, 0.13879852], [ 0. , 0.98635641, 0.42712011, ..., 0.13873015, 0.43195264, 0.49663365]]) - ddm_matrix_data(lagtime, q)float640.0 294.2 321.4 ... 201.1 200.4

array([[ 0. , 294.18943955, 321.41822078, ..., 192.93331805, 193.46556826, 192.76671589], [ 0. , 432.05714374, 490.9677582 , ..., 194.84772175, 195.06799833, 194.40459328], [ 0. , 535.36374381, 619.95883018, ..., 196.12616285, 196.21094092, 195.79399928], ..., [ 0. , 3966.56056507, 5791.89215548, ..., 205.00017796, 211.36062787, 212.61362392], [ 0. , 4167.1712352 , 5456.14848464, ..., 203.8398704 , 205.38454854, 207.78696548], [ 0. , 3716.77402137, 6067.88205869, ..., 207.76466695, 201.06258553, 200.39635905]]) - A(q)float64-190.0 2.585e+05 ... 19.47 20.65

array([-1.90000000e+02, 2.58493018e+05, 1.02602346e+04, 1.15497914e+04, 1.68195629e+04, 1.91789308e+04, 1.97920750e+04, 2.04739243e+04, 1.98628174e+04, 2.14217457e+04, 2.30787136e+04, 2.75439244e+04, 3.36077443e+04, 3.95384367e+04, 4.21701356e+04, 4.26722045e+04, 4.10361202e+04, 3.58616144e+04, 2.83223278e+04, 2.06264656e+04, 1.43607517e+04, 9.18098831e+03, 5.57030056e+03, 3.36316630e+03, 1.93279536e+03, 1.17701023e+03, 8.05816138e+02, 6.46477276e+02, 4.86766556e+02, 3.54100454e+02, 2.81344652e+02, 2.59050811e+02, 2.16860258e+02, 1.66873759e+02, 1.40019053e+02, 1.08582782e+02, 9.24025916e+01, 7.62421017e+01, 6.95296420e+01, 6.63192247e+01, 5.82623495e+01, 5.81042089e+01, 5.24400299e+01, 4.81539029e+01, 4.50185732e+01, 4.06202012e+01, 4.05361388e+01, 3.51453041e+01, 3.36993461e+01, 3.35304868e+01, 3.04943557e+01, 2.94060037e+01, 2.76688057e+01, 2.93246657e+01, 2.71957975e+01, 2.54568387e+01, 2.55785786e+01, 2.38141133e+01, 2.40730764e+01, 2.19637827e+01, 2.02505220e+01, 2.06261336e+01, 1.94747592e+01, 2.06536633e+01]) - B()int32190

array(190)

- model :

- DDM Matrix - Single Exponential

- data_to_use :

- DDM Matrix

- initial_params_dict :

- ["{'n': 0, 'value': 100.0, 'limits': [1.0, 1000000.0], 'limited': [True, True], 'fixed': False, 'parname': 'Amplitude', 'error': 0, 'step': 0}", "{'n': 1, 'value': 1.0, 'limits': [0.001, 10.0], 'limited': [True, True], 'fixed': False, 'parname': 'Tau', 'error': 0, 'step': 0}", "{'n': 2, 'value': 190.0, 'limits': [10.0, 220.0], 'limited': [True, True], 'fixed': False, 'parname': 'Background', 'error': 0, 'step': 0}", "{'n': 3, 'value': 1.0, 'limits': [0.5, 1.1], 'limited': [True, True], 'fixed': False, 'parname': 'StretchingExp', 'error': 0, 'step': 0}"]

- effective_diffusion_coeff :

- 0.6168642315520588

- tau_vs_q_slope :

- [-1.98005917]

- msd_alpha :

- [1.01007082]

- msd_effective_diffusion_coeff :

- [0.6138703]

- diffusion_coeff :

- 0.6104877159595499

- diffusion_coeff_std :

- 0.04076205720351696

- velocity :

- 1.0541545094734308

- velocity_std :

- 0.41611807499452924

- good_q_range :

- [4, 16]

- DataDirectory :

- C:/Users/rmcgorty/Documents/GitHub/PyDDM/Examples/

- FileName :

- images_nobin_40x_128x128_8bit.tif

- pixel_size :

- 0.242

- frame_rate :

- 41.7

- BackgroundMethod :

- None

- OverlapMethod :

- 2

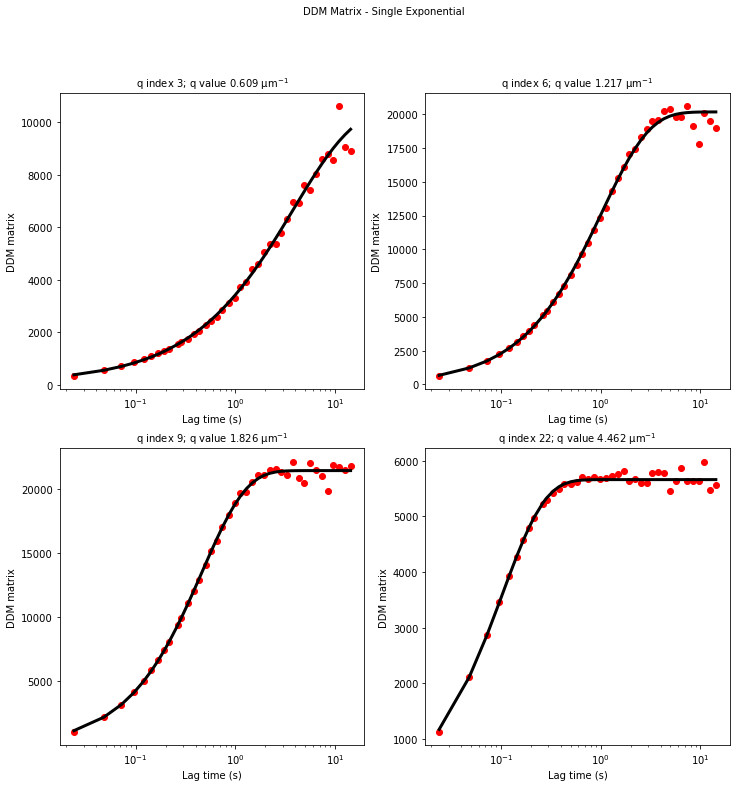

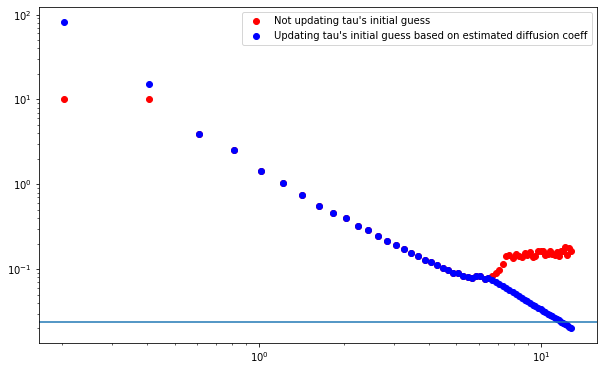

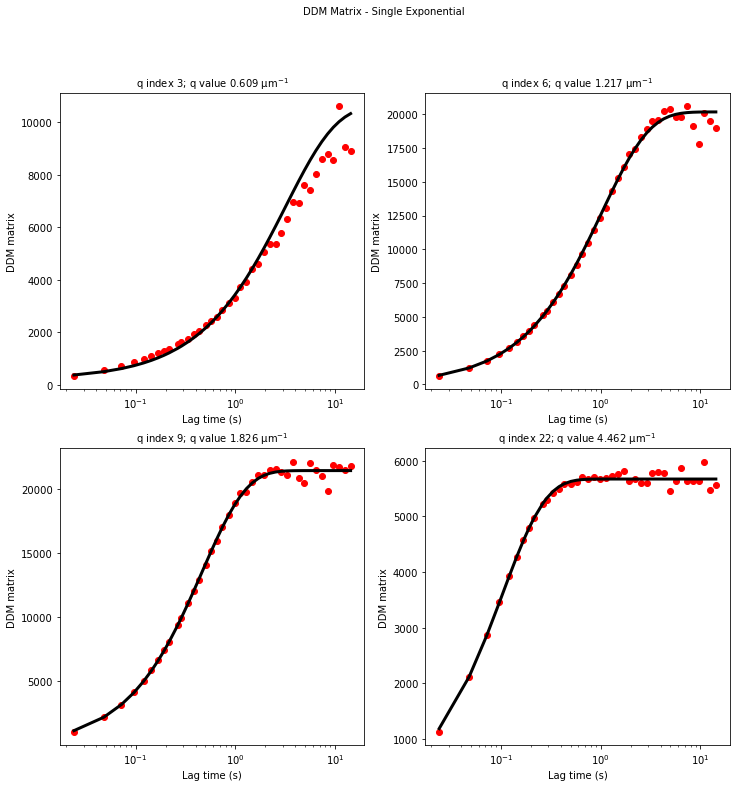

Our second attempt at fitting the data - updating initial guesses for tau#

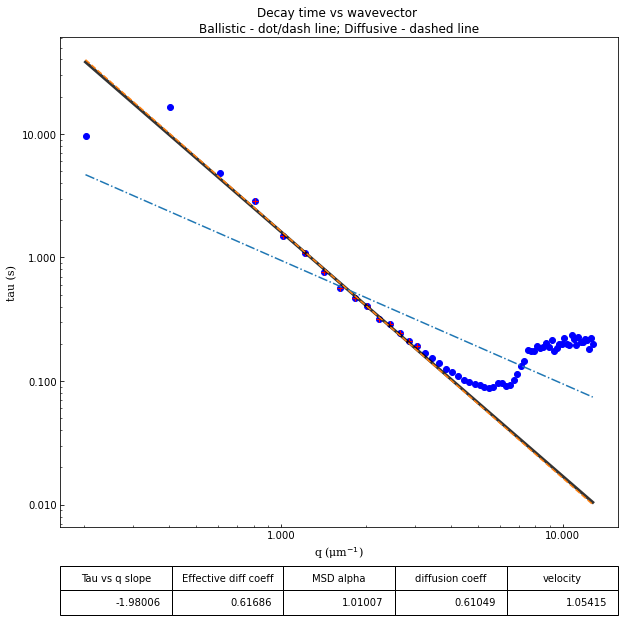

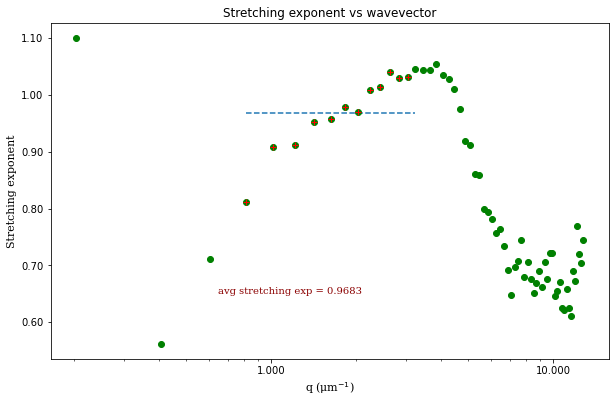

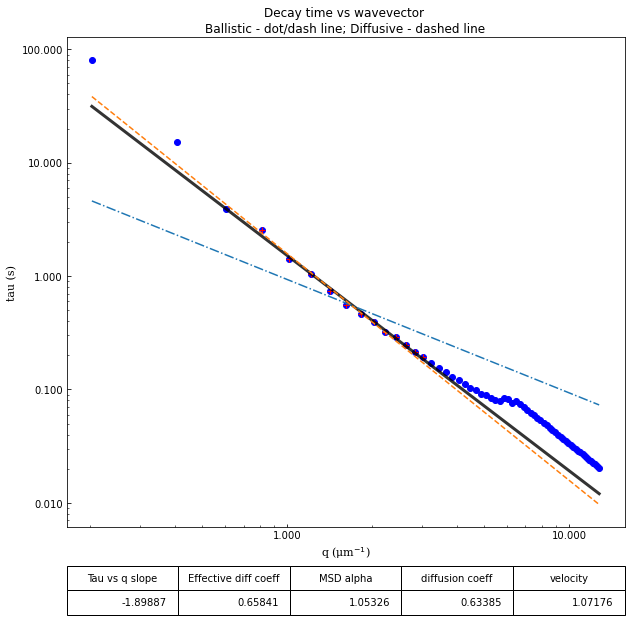

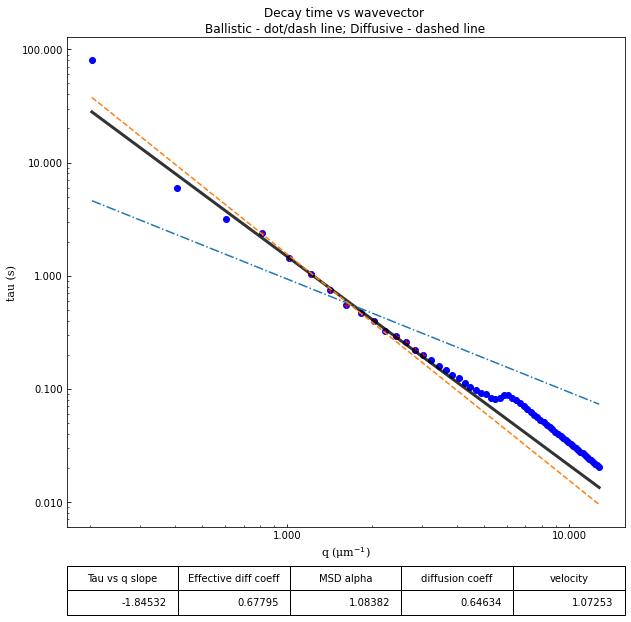

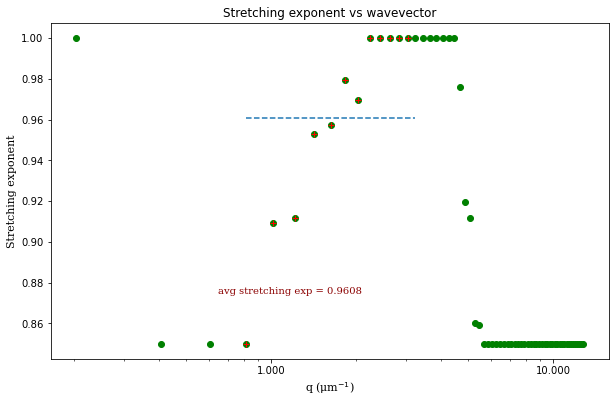

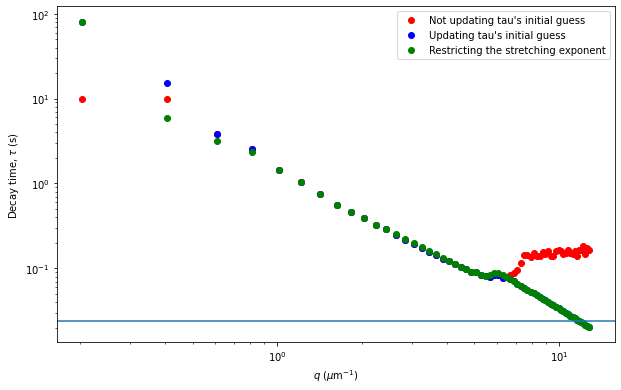

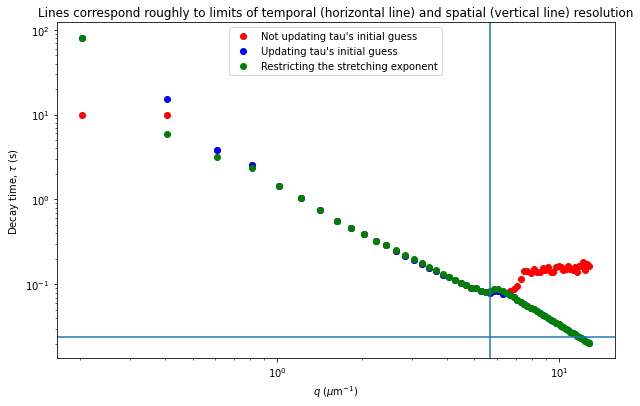

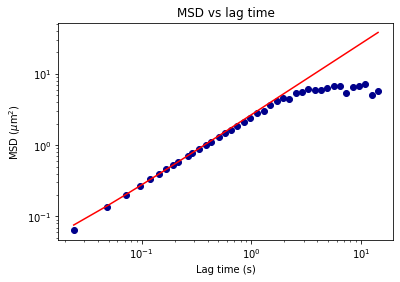

From the results of the fit, we can estimate a diffusion coefficient of about 0.6 \(\mu m^2 / s\). We determine this by looking at the characteristic decay time versus the wavevector (\(\tau\) vs \(q\)). On a log-log plot, a power-law relationship between two variables appears as a straight line whose slope equals the exponent. For this data, we see a clear power law, \(\tau \propto q^{-2}\), which indicates diffusive motion. Note that our data deviate from this power law for \(q\) greater than about 4 \(\mu m^{-1}\). This could be due to limited optical resolution at high wavevectors (which correspond to small length scales), or to not having the temporal resolution necessary to detect fast decay times. Lastly, it could be due to poor fit performance. To address that last possibility, we can use an initial guess for \(\tau\) at each \(q\) that is based on an estimated diffusion coefficient. That is, when we fit \(D(q, \Delta t)\) (or \(f(q,\Delta t)\)), for each \(q\) we calculate \(\tau_{guess} = 1 / (Dq^2)\), where \(D\) here is the estimated diffusion coefficient, and use that as the initial guess in the fit. We do that below.
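As a standalone illustration of this initial guess (separate from the PyDDM call in the next cell), the sketch below computes \(\tau_{guess} = 1 / (Dq^2)\) on a grid of wavevectors and checks that its log-log slope is -2. The variable names and the \(q\) grid are assumptions chosen to resemble the values in the fit output; this is not how PyDDM implements the update internally.

```python
import numpy as np

# Assumed values for illustration only.
D_est = 0.6                       # estimated diffusion coefficient, um^2/s
q = np.arange(1, 64) * 0.20284    # wavevectors in 1/um; q = 0 is skipped to avoid dividing by zero

# Initial guess for the decay time at each q, in seconds.
tau_guess = 1.0 / (D_est * q**2)

# On a log-log plot a power law is a straight line; fit its slope.
slope, intercept = np.polyfit(np.log(q), np.log(tau_guess), 1)
print(f"log-log slope of tau_guess vs q: {slope:.2f}")
```

For measured decay times, the same `np.polyfit` call on the logs of \(q\) and \(\tau\) gives the power-law exponent, which can be compared against the -2 expected for diffusion.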

[17]:

fit02 = ddm_fit.fit(name_fit='fit02', display_table=False,
                    use_A_from_images_as_guess=True, update_limits_on_A=True,
                    update_tau_based_on_estimated_diffcoeff=True, estimated_diffcoeff=0.6,
                    update_limits_on_tau=True, updated_lims_on_tau_fraction=1)

In function 'get_tau_vs_q_fit', using new tau...

Fit is saved in fittings dictionary with key 'fit02'.

[18]:

ddm.fit_report(fit02, q_indices=[3,6,9,22], forced_qs=[4,16], use_new_tau=False, show=True)

[18]:

<xarray.Dataset>

Dimensions: (parameter: 4, q: 64, lagtime: 40)

Coordinates:

* parameter (parameter) <U13 'Amplitude' 'Tau' ... 'StretchingExp'

* q (q) float64 0.0 0.2028 0.4057 0.6085 ... 12.37 12.58 12.78

* lagtime (lagtime) float64 0.02398 0.04796 0.07194 ... 12.59 14.36

Data variables:

parameters (parameter, q) float64 1.0 2.326e+05 9.894e+03 ... 0.5 0.5

theory (lagtime, q) float64 10.0 152.4 361.6 ... 202.0 201.7 201.6

isf_data (lagtime, q) float64 0.0 0.9996 0.9872 ... 0.432 0.4966

ddm_matrix_data (lagtime, q) float64 0.0 294.2 321.4 ... 207.8 201.1 200.4

A (q) float64 -190.0 2.585e+05 1.026e+04 ... 19.47 20.65

B int32 190

Attributes: (12/18)

model: DDM Matrix - Single Exponential

data_to_use: DDM Matrix

initial_params_dict: ["{'n': 0, 'value': 100.0, 'limits': [1.0...

effective_diffusion_coeff: 0.6584064996820138

tau_vs_q_slope: [-1.89886818]

msd_alpha: [1.053259]

... ...

DataDirectory: C:/Users/rmcgorty/Documents/GitHub/PyDDM/...

FileName: images_nobin_40x_128x128_8bit.tif

pixel_size: 0.242

frame_rate: 41.7

BackgroundMethod: None

OverlapMethod: 2

- parameter: 4

- q: 64

- lagtime: 40

- parameter(parameter)<U13'Amplitude' ... 'StretchingExp'

array(['Amplitude', 'Tau', 'Background', 'StretchingExp'], dtype='<U13')

- q(q)float640.0 0.2028 0.4057 ... 12.58 12.78

array([ 0. , 0.20284 , 0.405681, 0.608521, 0.811362, 1.014202, 1.217043, 1.419883, 1.622723, 1.825564, 2.028404, 2.231245, 2.434085, 2.636926, 2.839766, 3.042607, 3.245447, 3.448287, 3.651128, 3.853968, 4.056809, 4.259649, 4.46249 , 4.66533 , 4.86817 , 5.071011, 5.273851, 5.476692, 5.679532, 5.882373, 6.085213, 6.288053, 6.490894, 6.693734, 6.896575, 7.099415, 7.302256, 7.505096, 7.707937, 7.910777, 8.113617, 8.316458, 8.519298, 8.722139, 8.924979, 9.12782 , 9.33066 , 9.5335 , 9.736341, 9.939181, 10.142022, 10.344862, 10.547703, 10.750543, 10.953383, 11.156224, 11.359064, 11.561905, 11.764745, 11.967586, 12.170426, 12.373267, 12.576107, 12.778947]) - lagtime(lagtime)float640.02398 0.04796 ... 12.59 14.36

array([ 0.023981, 0.047962, 0.071942, 0.095923, 0.119904, 0.143885, 0.167866, 0.191847, 0.215827, 0.263789, 0.28777 , 0.335731, 0.383693, 0.431655, 0.503597, 0.57554 , 0.647482, 0.743405, 0.863309, 0.983213, 1.127098, 1.294964, 1.486811, 1.702638, 1.942446, 2.206235, 2.541966, 2.901679, 3.309353, 3.788969, 4.316547, 4.940048, 5.659472, 6.450839, 7.386091, 8.441247, 9.640288, 11.007194, 12.589928, 14.364508])

- parameters(parameter, q)float641.0 2.326e+05 9.894e+03 ... 0.5 0.5

array([[1.00000000e+00, 2.32643716e+05, 9.89373199e+03, 1.04425914e+04, 1.65958403e+04, 1.87741626e+04, 2.01262063e+04, 2.02699350e+04, 1.93938141e+04, 2.14281346e+04, 2.33743051e+04, 2.74778140e+04, 3.34522708e+04, 3.95131276e+04, 4.17820422e+04, 4.20664111e+04, 4.07569060e+04, 3.57098235e+04, 2.84201318e+04, 2.06082054e+04, 1.43591503e+04, 9.28277724e+03, 5.65119988e+03, 3.47725293e+03, 2.06716941e+03, 1.22311564e+03, 8.76927351e+02, 6.90124615e+02, 5.35443212e+02, 3.89510499e+02, 3.09479117e+02, 2.84955892e+02, 2.38546284e+02, 1.83561135e+02, 1.54020959e+02, 1.19441061e+02, 1.01642851e+02, 8.38663118e+01, 7.64826062e+01, 7.29511472e+01, 6.40885844e+01, 6.39146298e+01, 5.76840329e+01, 5.29692932e+01, 4.95204305e+01, 4.46822213e+01, 4.45897527e+01, 3.86598345e+01, 3.70692807e+01, 3.68835355e+01, 3.35437912e+01, 3.23466041e+01, 3.04356863e+01, 3.22571323e+01, 2.99153772e+01, 2.80025225e+01, 2.81364364e+01, 2.61955246e+01, 2.64803840e+01, 2.41601609e+01, 2.22755742e+01, 2.26887470e+01, 2.14222352e+01, 2.27190297e+01], [1.00000000e+01, 8.10157916e+01, 1.53295774e+01, 3.86806746e+00, 2.55606574e+00, 1.42436448e+00, 1.03553582e+00, 7.42218942e-01, 5.52015441e-01, 4.65091456e-01, 3.96220640e-01, 3.21261107e-01, 2.90633158e-01, 2.48487749e-01, 2.13902731e-01, 1.92959728e-01, ... 
1.75920784e+02, 1.75462434e+02, 1.77385444e+02, 1.77265516e+02, 1.78234387e+02, 1.76044620e+02, 1.76896189e+02, 1.77554782e+02, 1.77379772e+02, 1.78268340e+02, 1.78255143e+02, 1.79515166e+02, 1.79915464e+02, 1.79294718e+02, 1.80274342e+02, 1.78884768e+02], [1.10000000e+00, 1.10000000e+00, 5.13758578e-01, 7.10762358e-01, 8.11578193e-01, 9.09132572e-01, 9.11750832e-01, 9.52912637e-01, 9.57434030e-01, 9.79312823e-01, 9.69590566e-01, 1.00961966e+00, 1.01501443e+00, 1.04072569e+00, 1.03076437e+00, 1.03128894e+00, 1.04638759e+00, 1.04446796e+00, 1.04406777e+00, 1.05492850e+00, 1.03622512e+00, 1.02767912e+00, 1.01091421e+00, 9.75980332e-01, 9.19591679e-01, 9.11770096e-01, 8.60156128e-01, 8.59200858e-01, 7.98604406e-01, 7.94551000e-01, 7.81941080e-01, 7.57373234e-01, 7.63314258e-01, 7.43304844e-01, 7.05794318e-01, 6.59952326e-01, 6.13021408e-01, 5.87587545e-01, 5.74890649e-01, 5.32364098e-01, 5.32295111e-01, 5.18818644e-01, 5.05445433e-01, 5.02325262e-01, 5.10491451e-01, 5.00000000e-01, 5.05828686e-01, 5.00000000e-01, 5.00000000e-01, 5.00000000e-01, 5.00000000e-01, 5.00000000e-01, 5.00000000e-01, 5.00000000e-01, 5.00000000e-01, 5.00000000e-01, 5.00000000e-01, 5.00000000e-01, 5.00000000e-01, 5.00000000e-01, 5.00000000e-01, 5.00000000e-01, 5.00000000e-01, 5.00000000e-01]]) - theory(lagtime, q)float6410.0 152.4 361.6 ... 201.7 201.6

array([[1.00013109e+01, 1.52384616e+02, 3.61633798e+02, ..., 1.94039740e+02, 1.94324216e+02, 1.93918518e+02], [1.00028078e+01, 1.87321112e+02, 5.08207808e+02, ..., 1.96840289e+02, 1.96957227e+02, 1.96698370e+02], [1.00043826e+01, 2.24123965e+02, 6.19965793e+02, ..., 1.98299076e+02, 1.98319989e+02, 1.98127945e+02], ..., [1.06708801e+01, 2.46219857e+04, 5.64750065e+03, ..., 2.01983465e+02, 2.01696577e+02, 2.01603797e+02], [1.07242679e+01, 2.82783354e+04, 5.89647837e+03, ..., 2.01983465e+02, 2.01696577e+02, 2.01603797e+02], [1.07744986e+01, 3.23548994e+04, 6.14246430e+03, ..., 2.01983465e+02, 2.01696577e+02, 2.01603797e+02]]) - isf_data(lagtime, q)float640.0 0.9996 0.9872 ... 0.432 0.4966

array([[ 0. , 0.99959694, 0.9871915 , ..., 0.85778634, 0.82204821, 0.86604236], [ 0. , 0.99906358, 0.97066658, ..., 0.76497186, 0.7397658 , 0.78674034], [ 0. , 0.99866393, 0.95809464, ..., 0.70299025, 0.6810774 , 0.71946869], ..., [ 0. , 0.98539009, 0.4540191 , ..., 0.27275862, -0.09683656, -0.09489651], [ 0. , 0.98461401, 0.4867419 , ..., 0.32901286, 0.21002625, 0.13879852], [ 0. , 0.98635641, 0.42712011, ..., 0.13873015, 0.43195264, 0.49663365]]) - ddm_matrix_data(lagtime, q)float640.0 294.2 321.4 ... 201.1 200.4